Proteins are essential to life, and understanding their structure can facilitate a mechanistic understanding of their function. Through an enormous experimental effort, the structures of around 100,000 unique proteins have been determined, but this represents a small fraction of the billions of known protein sequences. Structural coverage is bottlenecked by the months to years of painstaking effort required to determine a single protein structure. Accurate computational approaches are needed to address this gap and to enable large-scale structural bioinformatics. Predicting the 3-D structure that a protein will adopt based solely on its amino acid sequence, the structure prediction component of the ‘protein folding problem’, has been an important open research problem for more than 50 years. Despite recent progress, existing methods fall far short of atomic accuracy, especially when no homologous structure is available. Here we provide the first computational method that can regularly predict protein structures with atomic accuracy even where no similar structure is known. We validated an entirely redesigned version of our neural network-based model, AlphaFold, in the challenging 14th Critical Assessment of protein Structure Prediction (CASP14), demonstrating accuracy competitive with experiment in a majority of cases and greatly outperforming other methods. Underpinning the latest version of AlphaFold is a novel machine learning approach that incorporates physical and biological knowledge about protein structure, leveraging multi-sequence alignments, into the design of the deep learning algorithm.
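"Atomic accuracy" is usually quantified as a distance between predicted and experimentally determined atom positions. Below is a minimal sketch of one such measure, root-mean-square deviation (RMSD). It assumes the two structures are already optimally superimposed and list the same atoms in the same order. Real assessments such as CASP also use superposition-free scores. The function name and coordinates are hypothetical, for illustration only.

```python
import math
from typing import Sequence, Tuple

Coord = Tuple[float, float, float]


def rmsd(predicted: Sequence[Coord], experimental: Sequence[Coord]) -> float:
    """Root-mean-square deviation (in the coordinates' units, e.g. angstroms)
    between two equally long atom lists. Assumes both structures are already
    superimposed; no rotation or translation is fitted here."""
    if len(predicted) != len(experimental) or not predicted:
        raise ValueError("need two non-empty coordinate lists of equal length")
    # Sum of squared per-atom distances between matching atoms.
    squared = sum(
        (px - ex) ** 2 + (py - ey) ** 2 + (pz - ez) ** 2
        for (px, py, pz), (ex, ey, ez) in zip(predicted, experimental)
    )
    return math.sqrt(squared / len(predicted))
```

For example, `rmsd([(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)], [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0)])` returns `1.0`, because every atom is displaced by exactly one unit.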

Google AI and DeepMind News and Discussions

Re: Google DeepMind News and Discussions

"Highly accurate protein structure prediction with AlphaFold"

And remember my friend, future events such as these will affect you in the future

Re: Google DeepMind News and Discussions

DeepMind says it will release the structure of every protein known to science

Back in December 2020, DeepMind took the world of biology by surprise when it solved a 50-year grand challenge with AlphaFold, an AI tool that predicts the structure of proteins. Last week the London-based company published full details of that tool and released its source code.

Now the firm has announced that it has used its AI to predict the shapes of nearly every protein in the human body, as well as the shapes of hundreds of thousands of other proteins found in 20 of the most widely studied organisms, including yeast, fruit flies, and mice. The breakthrough could allow biologists from around the world to understand diseases better and develop new drugs.

Re: Google DeepMind News and Discussions

Generally capable agents emerge from open-ended play

starspawn0:

In recent years, artificial intelligence agents have succeeded in a range of complex game environments. For instance, AlphaZero beat world-champion programs in chess, shogi, and Go after starting out with knowing no more than the basic rules of how to play. Through reinforcement learning (RL), this single system learnt by playing round after round of games through a repetitive process of trial and error. But AlphaZero still trained separately on each game — unable to simply learn another game or task without repeating the RL process from scratch. The same is true for other successes of RL, such as Atari, Capture the Flag, StarCraft II, Dota 2, and Hide-and-Seek. DeepMind’s mission of solving intelligence to advance science and humanity led us to explore how we could overcome this limitation to create AI agents with more general and adaptive behaviour. Instead of learning one game at a time, these agents would be able to react to completely new conditions and play a whole universe of games and tasks, including ones never seen before.

Today, we published "Open-Ended Learning Leads to Generally Capable Agents," a preprint detailing our first steps to train an agent capable of playing many different games without needing human interaction data. We created a vast game environment we call XLand, which includes many multiplayer games within consistent, human-relatable 3D worlds. This environment makes it possible to formulate new learning algorithms, which dynamically control how an agent trains and the games on which it trains. The agent’s capabilities improve iteratively as a response to the challenges that arise in training, with the learning process continually refining the training tasks so the agent never stops learning. The result is an agent with the ability to succeed at a wide spectrum of tasks — from simple object-finding problems to complex games like hide and seek and capture the flag, which were not encountered during training. We find the agent exhibits general, heuristic behaviours such as experimentation, behaviours that are widely applicable to many tasks rather than specialised to an individual task. This new approach marks an important step toward creating more general agents with the flexibility to adapt rapidly within constantly changing environments.
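The post describes training tasks that are continually refined as the agent improves. One simple way to get that behaviour is to keep only the tasks the agent currently solves sometimes but not always. The sketch below assumes a lot: the success model, the 0.1/0.9 thresholds, the task names, and both function names are hypothetical. It is not the paper's actual algorithm.

```python
from typing import Callable, Dict, List


def select_curriculum(
    difficulty: Dict[str, float],
    success_rate: Callable[[float], float],
    low: float = 0.1,
    high: float = 0.9,
) -> List[str]:
    """Keep tasks the agent solves sometimes but not always; tasks that are
    always solved or never solved give little learning signal."""
    return [name for name, d in difficulty.items() if low <= success_rate(d) <= high]


def run_curriculum(
    difficulty: Dict[str, float], generations: int = 4, step: float = 0.3
) -> List[List[str]]:
    """Re-select the curriculum each generation as a toy 'skill' value grows."""
    skill = 0.0
    history: List[List[str]] = []
    for _ in range(generations):
        def success_rate(d: float, s: float = skill) -> float:
            # Hypothetical success model: the more skill exceeds difficulty,
            # the more often the agent succeeds (clamped to [0, 1]).
            return max(0.0, min(1.0, 0.5 + s - d))

        history.append(select_curriculum(difficulty, success_rate))
        skill += step  # stand-in for actually training on the selected tasks
    return history
```

With `{"find_object": 0.0, "hide_and_seek": 0.6, "capture_the_flag": 1.2}`, the selected set drifts from the easy task toward the harder ones as the toy skill rises. The easy task drops out once the agent always solves it.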

starspawn0:

This is one of the tasks I once wrote that I'd like to see brain data applied to. In an old post of mine, I wondered: could one use brain data to build a game-playing agent that can do decently on a new game out of the box? You see, humans can be shown a new game and, if they have some game-playing experience, can do an OK job on the first try -- e.g. they won't die immediately; won't run into enemies; will predict where the enemies are moving, using physical commonsense reasoning; infer what a goal might be; and so on. That's a much, much harder problem than training an agent to solve any particular game. It requires something closer to AGI than we've seen in game-playing AIs in the past.

I would say this is as much a breakthrough and shock as GPT-3 (and GPT-2). Scale this up and use more real-world tasks (instead of games), and you could probably make something that genuinely seems intelligent, if put in a robot body and allowed to interact with the world. Add in some language capability, and you're going to have something that needs to be watched carefully!

....

The success of this work will lead to even larger attempts by other groups. The perceived risk in attempting something like this is now a lot lower. Before this work, some teams might have had the same idea, but then thought, "Ahh... probably won't work. And if we try, we'll have wasted large numbers of hours and millions of dollars, with little to show for it, except marginally better game-playing agents. Could we really make this work?..." and the doubt and skepticism set in.

Re: Google DeepMind News and Discussions

How DeepMind Is Reinventing the Robot

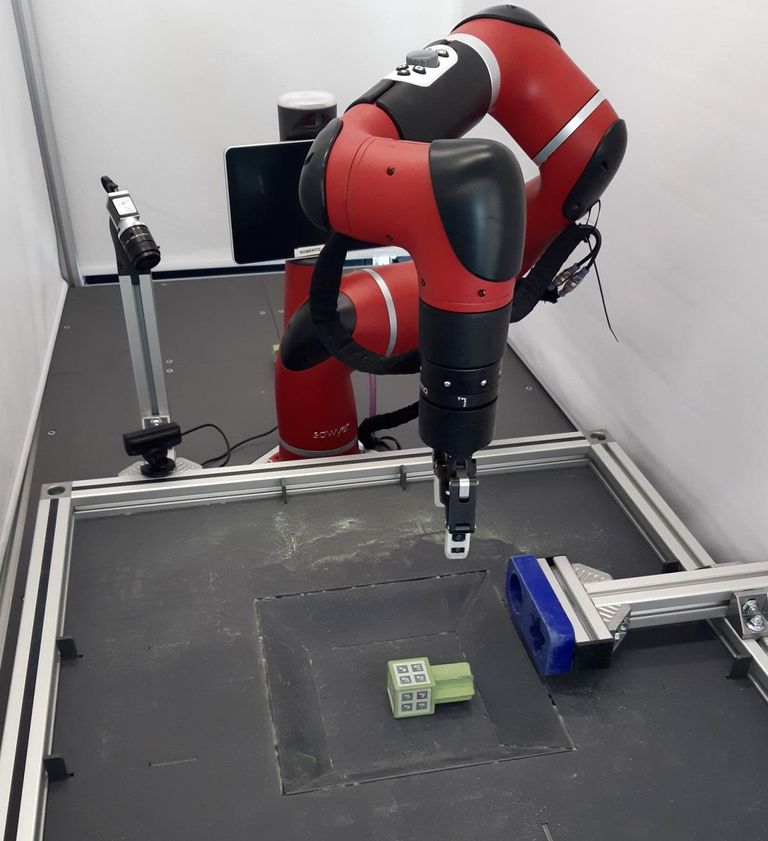

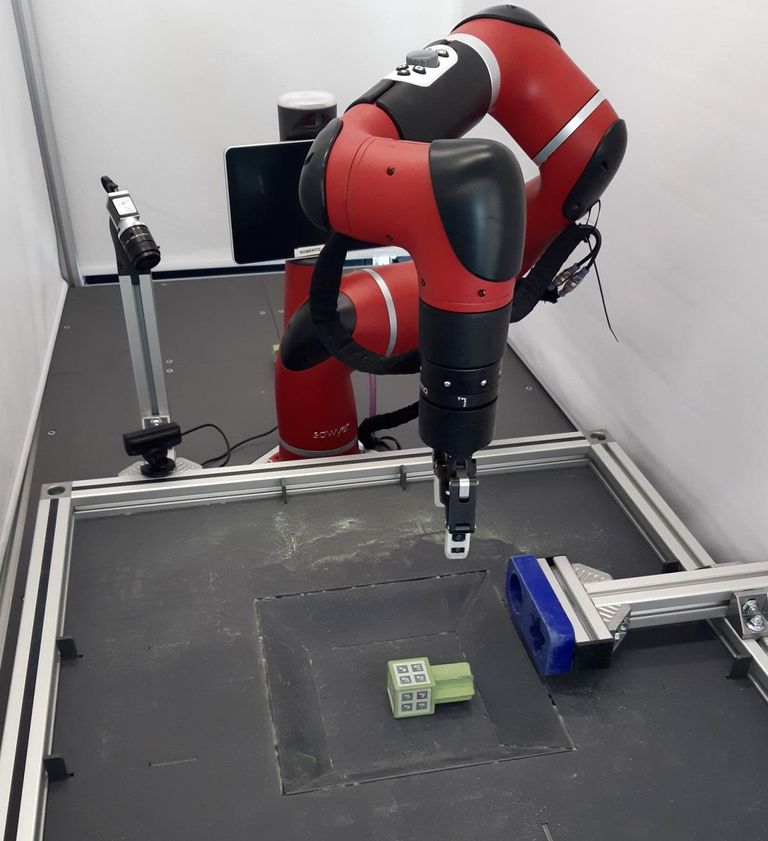

Pushing a star-shaped peg into a star-shaped hole may seem simple, but it was a minor triumph for one of DeepMind's robots.

DEEPMIND

Artificial intelligence has reached deep into our lives, though you might be hard pressed to point to obvious examples of it. Among countless other behind-the-scenes chores, neural networks power our virtual assistants, make online shopping recommendations, recognize people in our snapshots, scrutinize our banking transactions for evidence of fraud, transcribe our voice messages, and weed out hateful social-media postings. What these applications have in common is that they involve learning and operating in a constrained, predictable environment.

But embedding AI more firmly into our endeavors and enterprises poses a great challenge. To get to the next level, researchers are trying to fuse AI and robotics to create an intelligence that can make decisions and control a physical body in the messy, unpredictable, and unforgiving real world. It's a potentially revolutionary objective that has caught the attention of some of the most powerful tech-research organizations on the planet. "I'd say that robotics as a field is probably 10 years behind where computer vision is," says Raia Hadsell, head of robotics at DeepMind, Google's London-based AI partner. (Both companies are subsidiaries of Alphabet.)

Even for Google, the challenges are daunting. Some are hard but straightforward: For most robotic applications, it's difficult to gather the huge data sets that have driven progress in other areas of AI. But some problems are more profound, and relate to longstanding conundrums in AI. Problems like, how do you learn a new task without forgetting the old one? And how do you create an AI that can apply the skills it learns for a new task to the tasks it has mastered before?

Success would mean opening AI to new categories of application. Many of the things we most fervently want AI to do—drive cars and trucks, work in nursing homes, clean up after disasters, perform basic household chores, build houses, sow, nurture, and harvest crops—could be accomplished only by robots that are much more sophisticated and versatile than the ones we have now.

Beyond opening up potentially enormous markets, the work bears directly on matters of profound importance not just for robotics but for all AI research, and indeed for our understanding of our own intelligence.

Let's start with the prosaic problem first...

Re: Google DeepMind News and Discussions

DeepMind’s latest trick? Predicting the weather

After mastering Go and StarCraft, DeepMind is taking its AI into another challenging arena: predicting the weather. The Alphabet-owned company has been quietly working with the Met Office over the past few years, and today, they report the fruits of their collaboration in the journal Nature. In short, DeepMind has devised a new machine learning model that can predict whether it’s going to rain within the next couple of hours.

This type of weather forecasting is known as precipitation nowcasting: predicting rainfall on a very short timescale, up to two hours before a downpour. Today's weather forecasts are pretty nifty at predicting rain further ahead in the future, from six hours to about a couple of weeks ahead. But any sooner than that is where blind spots appear, and that’s where machine learning can bridge a much-needed gap.

A deluge of rain isn’t just an annoyance for someone who just got their hair done. Being able to foretell heavy rain in advance is crucial for everyday but important situations such as road safety, air traffic control and early-warning systems for flooding. The unfolding climate crisis also means that extreme weather events, such as heavy rainstorms or flooding, will only become more frequent. The ability to predict rainfall better and faster is pretty important for making quick decisions in these situations: halting a train or evacuating a building, for example.

The Met Office relies on radar imagery to predict when the heavens will open up. Radar works by sending a pulse into the atmosphere and listening for the echo: the time the echo takes to return tells you how far away the rain is, and the strength of the reflection tells you how heavy it is. The data are then sent to the Met Office HQ, where they are processed to get a picture of the precipitation over the UK. DeepMind’s model was trained on UK radar imagery from 2016 to 2018 so that it could reliably predict what will happen an hour or two into the future.
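In standard weather-radar practice, the delay of the echo gives the distance to the rain. The strength of the returned signal (reflectivity, in dBZ) is what indicates rainfall intensity. Below is a minimal sketch of both conversions. It assumes the textbook Marshall-Palmer relation Z = 200 R^1.6; operational services such as the Met Office use their own calibrations. The function names are hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def echo_range_km(round_trip_s: float) -> float:
    """Distance to the reflecting rain; the pulse travels out and back,
    so the one-way distance is half the round-trip path."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2 / 1000


def rain_rate_mm_per_h(dbz: float, a: float = 200.0, b: float = 1.6) -> float:
    """Invert an assumed Z = a * R**b relationship (defaults: Marshall-Palmer)
    to turn reflectivity into a rain rate R in mm/h."""
    z = 10 ** (dbz / 10)  # dBZ -> reflectivity factor Z in mm^6 / m^3
    return (z / a) ** (1 / b)
```

An echo returning after 1 ms comes from roughly 150 km away. Under these assumptions, a 40 dBZ return corresponds to about 11.5 mm/h of rain, and weaker returns map to lighter rain.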
