GPU and CPU news and discussions

The truth about CPU corecount/price since 2010.

Truth be told, you can make the argument that the number of cores you get for a given amount of money hasn't really changed since 2010. I'm not kidding. AMD Phenom II X6 1075T (45nm, 6 cores, 3.5 GHz, 3 MB L2 and 6 MB L3) cost $245 MSRP in 2010; AMD Ryzen 5 7600X (5nm, 6 cores, 5.3 GHz, 6 MB L2 and 32 MB L3) is $240 today. The price of one core of a new architecture was about $40 in 2010 and is about $40 today. As for workstations, a 6-core Xeon in 2010 was $594 (W3680), while a 56-core Xeon today is $5889 (3495X), so again, cost per core has remained roughly stagnant (about $99 per core 13 years ago, about $105 today). How about performance? The Cinebench R15 score of Ryzen 5 7600X is 5.3 times higher than that of Phenom II X6 1075T, and since both have 6 cores, that's how much faster a single core has become. This goes against the notion that what improves is multi-core and not single-core performance. In fact, we've had the same number of cores for the same money since 2010. You could buy a 4-core 2.833 GHz Intel Core2 Quad Q9500 (Yorkfield) for $150-160 in 2010, which was $37.5-40 per core. Core i3-13100 today is $141 ($35.25 per core) and has a 5.47x higher Cinebench R23 score than the Core2 Quad Q9500. So performance per core is 5.3-5.5x higher than in 2010. Of course, it also depends on which CPUs you choose as examples.
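For anyone who wants to check the per-core prices, here is a minimal Python sketch. The prices and core counts are the ones quoted in the post; the list layout and the `price_per_core` helper are just illustrative names, not anything official.

```python
# Prices (USD) and core counts as quoted in the post above.
cpus = [
    ("Phenom II X6 1075T (2010)", 245, 6),
    ("Ryzen 5 7600X (today)", 240, 6),
    ("Xeon W3680 (2010)", 594, 6),
    ("Xeon 3495X (today)", 5889, 56),
    ("Core i3-13100 (today)", 141, 4),
]


def price_per_core(price_usd, cores):
    """Return the nominal price of one core in USD (no inflation adjustment)."""
    return price_usd / cores


for name, price, cores in cpus:
    print(f"{name}: ${price_per_core(price, cores):.2f} per core")
```

These are nominal dollars, so adjusting for inflation would make today's cores look cheaper than the raw numbers suggest.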

Global economy doubles in product every 15-20 years. Computer performance at a constant price doubles nowadays every 4 years on average. Livestock-as-food will globally stop being a thing by ~2050 (precision fermentation and more). Human stupidity, pride and depravity are the biggest problems of our world.

a short evaluation of the last 14 years of high-end gaming GPUs

RTX 4090 released on October 12th, 2022 has 116.6x more flops (fp32) than GTX 285 released on January 15th, 2009.

Some quick specs:

GTX 285: 1.4 billion transistors | 55nm TSMC process | 470 mm² | 3 million transistors per mm² | Tesla 2.0 architecture | MSRP 359 USD | 648 MHz core clock | 708.5 GFLOPS FP32 | 1 GB VRAM | 159 GB/s memory bandwidth | 20.74 GPixel/s | 51.84 GTexel/s | 256 KB L2 cache | 204 W TDP

RTX 4090: 76.3 billion transistors | 4nm TSMC process | 608 mm² | 125.5 million transistors per mm² | Ada Lovelace architecture | MSRP 1599 USD | 2520 MHz core clock | 82.58 TFLOPS FP32 | 24 GB VRAM | 1008 GB/s memory bandwidth | 443.5 GPixel/s | 1290 GTexel/s | 72 MB L2 cache | 450 W TDP | additional ray-tracing and tensor cores

RTX 4090 has 9.55x faster memory (GDDR6X), but a 384-bit instead of a 512-bit bus compared to GTX 285; going by the bandwidth figures above, that is 6.34x more GB/s. It also has 54.5x more transistors, a 29.36% larger die, 41.83x higher transistor density (according to the classic Moore's Law, it ought to be 128x higher), 3.89x higher core clock, 21.38x more GPixel/s, 24.88x more GTexel/s, 288x larger L2 cache, 2.2x higher TDP, 4.45x higher MSRP without adjusting for inflation and 3.19x higher MSRP when adjusting for inflation.
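The ratios can be recomputed from the spec lines with a short Python sketch. The numbers are copied from the two spec lists above; the dictionary keys and the `ratios` helper are made-up names for illustration.

```python
# Specs copied from the two lists above (FP32 throughput converted to GFLOPS).
gtx_285 = {
    "transistors_bn": 1.4,
    "die_mm2": 470,
    "core_mhz": 648,
    "fp32_gflops": 708.5,
    "bandwidth_gbs": 159,
    "tdp_w": 204,
    "msrp_usd": 359,
}
rtx_4090 = {
    "transistors_bn": 76.3,
    "die_mm2": 608,
    "core_mhz": 2520,
    "fp32_gflops": 82580,
    "bandwidth_gbs": 1008,
    "tdp_w": 450,
    "msrp_usd": 1599,
}


def ratios(old, new):
    """Return new/old for every spec the two cards have in common."""
    return {key: new[key] / old[key] for key in old}


r = ratios(gtx_285, rtx_4090)
# Density ratio from the unrounded transistor counts and die sizes.
density_ratio = r["transistors_bn"] / r["die_mm2"]

for key, value in r.items():
    print(f"{key}: {value:.2f}x")
print(f"transistor density: {density_ratio:.2f}x")
```

The density ratio comes out slightly different from the 41.83x in the post, because the post divides the already rounded per-mm² figures.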

According to the https://www.techpowerup.com/gpu-specs/g ... x-285.c238 relative performance comparison, RTX 4090 is on average 24x faster in games and also has 24x more VRAM. 24x in 14 years means a 25.5% (1.255x) compound annual growth rate. So on average, GPUs have been getting 25.5% faster every year since early 2009 (the growth rate used to be higher before that). 24x divided by the 3.19x higher price equals 7.52x better performance and memory for the same price, compared to early 2009 (GTX 285 got cheaper by the end of 2009 and RTX 4090 might also become cheaper by the end of 2023). So the real performance increase is 4.86x smaller than the theoretical flops increase. Conclusion: don't look at flops, look at the actual performance. Gigapixels and gigatexels are a much better indicator of the actual performance, but they are also not always entirely accurate (different architectures, different memory bandwidths, different cache sizes, different cache speeds). As for ray-tracing and DLSS, I dislike them and don't care about them, so I won't even comment on them. Quality of RT or DLSS implementation depends on the game.
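The compound-rate arithmetic is easy to reproduce. A minimal Python sketch, using only the figures quoted in the post (24x in games, 14 years, 3.19x inflation-adjusted price, 116.6x flops); the function name is my own.

```python
def annual_growth(total_multiple, years):
    """Compound annual growth rate implied by a total multiple over `years`."""
    return total_multiple ** (1 / years) - 1


game_speedup = 24        # average in-game speedup quoted in the post
years = 14
price_multiple = 3.19    # inflation-adjusted MSRP ratio quoted in the post
flops_multiple = 116.6   # theoretical FP32 ratio quoted in the post

rate = annual_growth(game_speedup, years)
perf_per_dollar = game_speedup / price_multiple
flops_vs_games = flops_multiple / game_speedup

print(f"annual growth: {rate:.1%}")
print(f"performance per (inflation-adjusted) dollar: {perf_per_dollar:.2f}x")
print(f"flops grew {flops_vs_games:.2f}x more than game performance")
```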

Unfortunately, I don't know what the specs or performance will be in 14 years. In the last 14 years, performance grew by 2x every 3 years on average, but in the next 14, it might grow by 2x every 4 years on average. CMOS might be an S-curve. Providing 24x more gaming performance than RTX 4090 in a single card with a single GPU will prove to be very challenging, especially below 2000-2500 USD. Probably 5 TB/s memory is doable, a 4 GHz core clock is doable, 40K shading units are doable, 1-2 GB of cache is doable and 192 GB of VRAM is also doable at $1999-2499 and 600 W TDP in the year 2037. Some might consider this underwhelming, but I'm just writing what is possible and probable under the current paradigm, and we might not see a new paradigm before the 2040s.
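To put the two doubling periods side by side, here is a small Python sketch. It assumes smooth exponential growth, which is a simplification, and `growth_multiple` is just an illustrative helper name.

```python
def growth_multiple(years, doubling_period_years):
    """Total speedup after `years` if performance doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


past = growth_multiple(14, 3)    # 2x every 3 years, roughly the last 14 years
future = growth_multiple(14, 4)  # 2x every 4 years, the slower scenario

print(f"2x every 3 years for 14 years: {past:.1f}x")
print(f"2x every 4 years for 14 years: {future:.1f}x")
```

So under the slower scenario, a 2037 card would land well short of another 24x.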

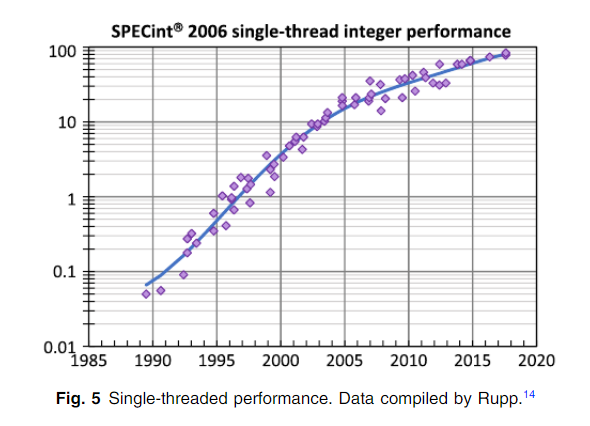

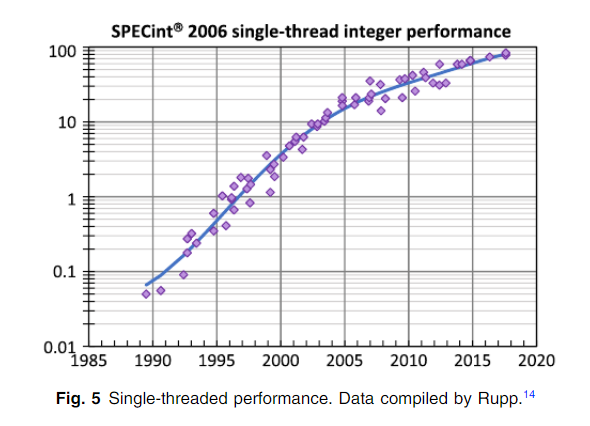

single-threaded microprocessor integer performance since 1989

Single-threaded integer performance of CPUs increased ~1600 times between the introduction of the 1.2 million transistor, 25 MHz 486-DX (first CPU with [L1] cache and floating-point unit built-in) in 1989 ($1200 then, $3000 now) and the i7-8700K in 2017. Since then, it has improved by another 60%, to about 2560 times. However, i7, i9, R7 and R9 are not $3000 (the 32C64T 3.85 GHz AMD EPYC 9334 is 2990 USD MSRP), so it's not exactly an apples-to-apples comparison. You can get almost all of that 2560x in a $240-260 CPU. Software often scales very well between 1 and 4 cores and threads, so 4 cores and 4 threads today can result in about 10,000x faster processing than a 25 MHz 486-DX from 1989. Moving from 4 cores 4 threads to 6 cores 6 threads may result in 20% faster processing, and moving to 8 cores 8 threads may result in another 10% faster processing in many cases. Increasing cores and threads further may result in a 5% or even smaller improvement.

Ray Kurzweil, back in the 90s, expected computer hardware to literally get exactly twice as fast every year. In reality, a typical program can now run 10,000 - 15,000x faster (using a CPU only) than on a cutting-edge machine in 1989 (not counting any algorithmic improvements). More than that for the most parallelizable tasks (as in the case of some server workloads running on Genoa or Sapphire Rapids). 2x every year since 1989 would result in 17,180,000,000 (17 billion 180 million) times faster CPUs, which is nowhere near the actual situation this year. We are literally about 1 million times slower. With possible GPU acceleration, we can get 10-100x closer, but that is still far off. No wonder that his other predictions have also turned out to be too optimistic on the timing. And timing is really important, as he himself said many times (and wrote about as well).
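Here is the same comparison as a short Python sketch. It assumes "this year" means 2023 (34 doublings since 1989) and uses the 10,000 - 15,000x range from the post; the variable names are my own.

```python
start_year, this_year = 1989, 2023  # assumption: the post was written in 2023
years = this_year - start_year

# Doubling every single year since 1989.
predicted = 2 ** years

# CPU-only speedup range quoted in the post.
observed_low, observed_high = 10_000, 15_000

shortfall_low = predicted / observed_high
shortfall_high = predicted / observed_low


def implied_annual_factor(total_multiple, years):
    """Yearly growth factor that would produce `total_multiple` over `years`."""
    return total_multiple ** (1 / years)


print(f"predicted speedup: {predicted:,}x")
print(f"shortfall: {shortfall_low:,.0f}x to {shortfall_high:,.0f}x")
print(f"actual yearly factor: {implied_annual_factor(observed_low, years):.2f}x "
      f"to {implied_annual_factor(observed_high, years):.2f}x")
```

So the actual long-term pace works out to roughly 1.3x per year rather than 2x.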

I expect the current trend to continue (the long-term trend can be divided into a 1st part, lasting until about the $729 Athlon 64 4000+ from Q4 2004, and a 2nd part, which continues to this day). The later Core2 Duo models about doubled the single-threaded performance of the Athlon 64 4000+. There are rumors that Zen 5 is going to bring another 20-40% PPC (performance per clock) improvement. Intel Lunar Lake is supposed to be much more energy efficient than Raptor Lake. The biggest problem is that performance usually doesn't scale well with core counts exceeding four (which is why Intel was on four cores in mainstream for 10 years). You may for example get 20 frames per second in a video game with 1 core, 40 with 2 cores, 80 with 4 cores, 100 with 6 cores, 110 with 8 cores and 115 with 16 cores. Which is why AMD introduced V-cache, which boosts framerates by 15% at the same core count. However, if you are recording and streaming at the same time, or doing 3D modelling at the same time, then a 16-core CPU will be more useful for you. Otherwise I recommend the upcoming 7800X3D.
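The diminishing returns in the framerate example can be made explicit with a few lines of Python. The fps numbers are the hypothetical ones from the post, not measurements, and the `scaling` helper is an illustrative name.

```python
# Hypothetical framerates from the example above: cores -> frames per second.
fps_by_cores = {1: 20, 2: 40, 4: 80, 6: 100, 8: 110, 16: 115}


def scaling(fps_by_cores):
    """Return {cores: (speedup vs 1 core, speedup per core)}."""
    base = fps_by_cores[1]
    return {
        cores: (fps / base, fps / base / cores)
        for cores, fps in fps_by_cores.items()
    }


for cores, (speedup, efficiency) in scaling(fps_by_cores).items():
    print(f"{cores:>2} cores: {speedup:.2f}x speedup, {efficiency:.0%} efficiency")
```

In this example, efficiency stays at 100% up to 4 cores and then drops quickly, which is the whole point about core counts above four.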

graph source: https://www.researchgate.net/publicatio ... ithography

computing per $1000 1900-2075 trend evaluation

Below is a chart (from Fortune magazine) of the computing per $1000 trend for 1900-2075, according to Ray Kurzweil's ideas, books and speeches. I don't think we are on track. In my opinion, in 2023 we won't be able to buy a $1000 computer that's as fast as the human brain. 1000 USD from 1998 is 1850 USD today, but from what I see, that's still not enough money. An RTX 4090 alone would cost you $1700, and that's only 83 TFLOPS. Most scientists think that the human brain is far above that. I don't see technology doubling every year in anything, aside from some AI improvements. So no, I don't think a $1000 computer in 2045 will be as fast as 10 billion human brains, but it will be faster than a 2023 computer.

Re: GPU and CPU news and discussions

Below is a list of the most popular graphics cards according to the Steam Hardware Survey for February 2023. I'm not sure if it's reliable, but it's what we have. GTX 1650 is as fast as GTX 780 or RX 470. GTX 1060 is as fast as GTX 780 Ti or RX 480. RTX 3060 Laptop is as fast as GTX 1070 Max-Q or GTX 780M SLI (two cards connected together). So the most popular cards in 2023 are about as fast as the high-end cards from 2013, such as GTX Titan or Radeon 290X. Not great progress if you ask me, but progress nonetheless. RTX 3060 Desktop is currently gaining popularity the quickest of all, so it might take 1st place in the survey in the coming months. 2nd place in gaining popularity goes to the 3060 Laptop.

This video may interest some of you:

Re: GPU and CPU news and discussions

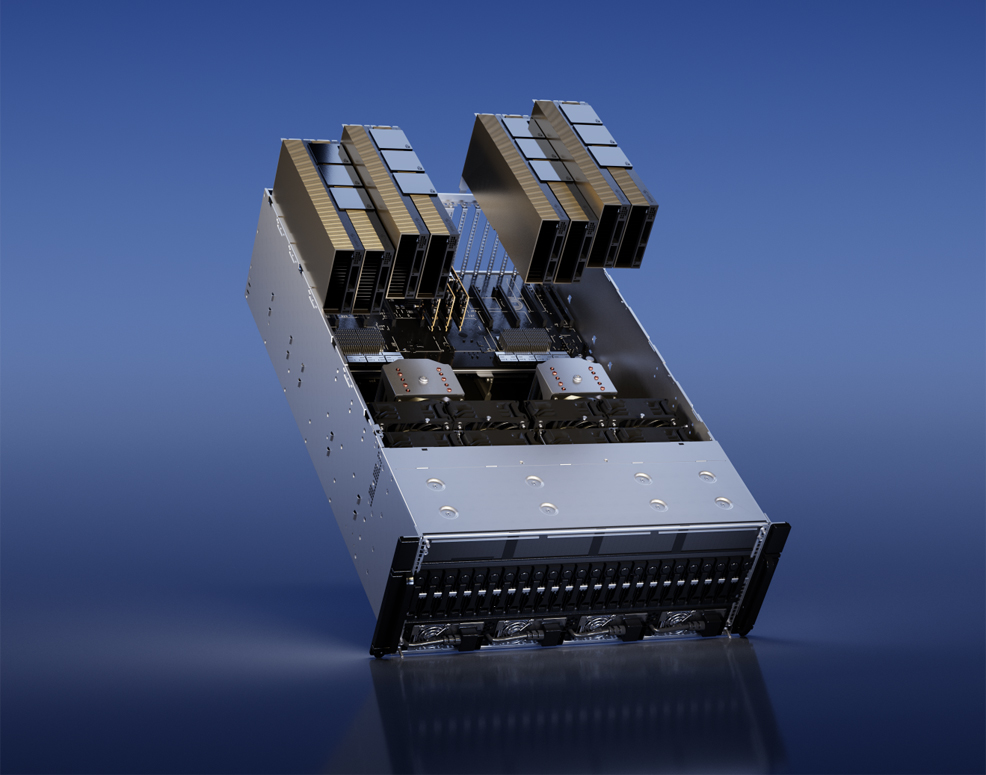

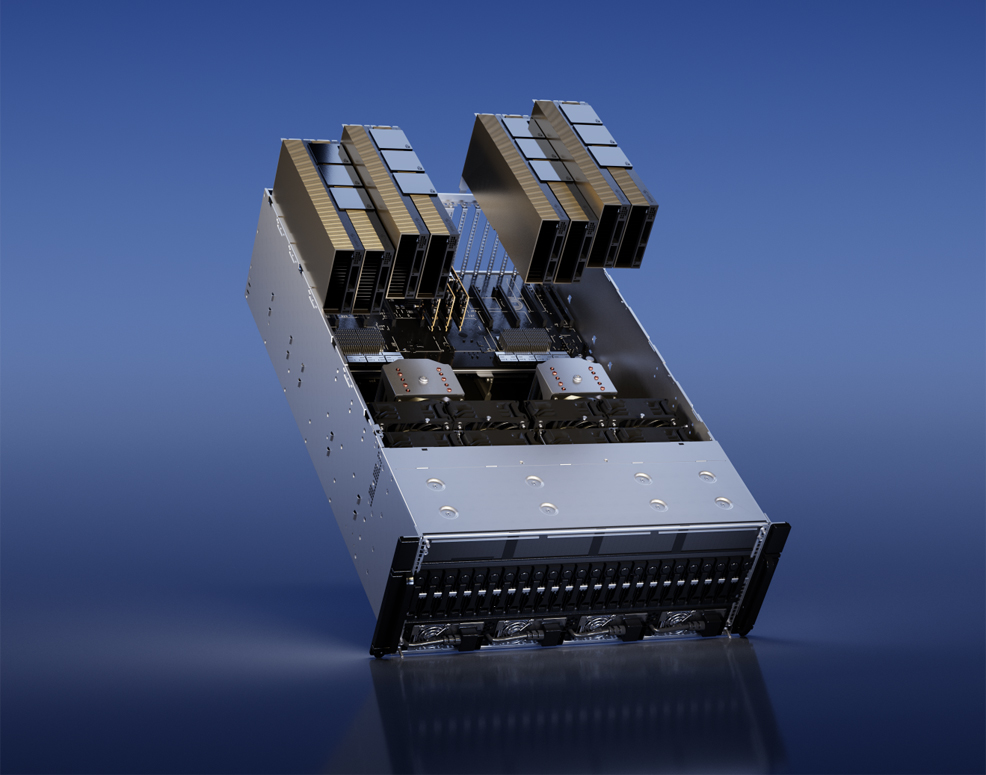

New card from Nvidia will accelerate AI

22nd March 2023

U.S. chip giant Nvidia has launched the H100 NVL, a next-generation hardware accelerator for large language models.

[...]

When measured against the older A100 (in tests using 8 x H100 NVLs vs. 8 x A100s), it shows an overall 12x gain in the inference throughput of GPT-3. This will make the H100 NVL a highly attractive option for running the next generation of large language models.

Read more: https://www.futuretimeline.net/blog/202 ... erator.htm

CPU progress vs GPU progress ← the truth

I've seen Internet users claim many, many times that GPU progress is (much) faster than CPU progress. I also used to think that. It's not. I did some research.

The $570 i9-13900K is 30x faster than the $266 C2Q Q6600 from 2007.

The $670 Radeon RX 6950 XT is also 30x faster than the $349 GeForce 8800 GT from 2007.

You can get even faster processors by paying more in both cases.

Progress is exactly the same in both cases, believe it or not. Some people are propagating opinions that CPU progress is slower than it really is and that GPU progress is faster than it really is (for example Nvidia's CEO Jensen Huang wants you to believe that). Remember, ASICs ≠ GPUs. If something can be parallelized, CPUs can use all available threads for that, just like GPUs can use all available execution units.
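A rough Python sketch of what these numbers imply per dollar and per year. It uses the nominal prices and the 30x figures from the post, and it assumes a 15-year span (2007 to the 2022 launch of both newer parts); the helper names are illustrative.

```python
def perf_per_dollar_gain(speedup, old_price, new_price):
    """Speedup divided by the nominal price ratio (no inflation adjustment)."""
    return speedup / (new_price / old_price)


def annual_growth(total_multiple, years):
    """Compound annual growth rate implied by a total multiple over `years`."""
    return total_multiple ** (1 / years) - 1


years = 15  # assumption: 2007 to 2022, when the i9-13900K and RX 6950 XT launched

cpu_gain = perf_per_dollar_gain(30, old_price=266, new_price=570)
gpu_gain = perf_per_dollar_gain(30, old_price=349, new_price=670)

print(f"CPU: {cpu_gain:.1f}x more performance per nominal dollar")
print(f"GPU: {gpu_gain:.1f}x more performance per nominal dollar")
print(f"30x over {years} years is {annual_growth(30, years):.1%} per year")
```

With these inputs, both land in the same ballpark, which matches the point of the post.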

Server CPU progress 2017-2023 and beyond

CPU progress is not stopping or slowing down.

2022 EPYC Genoa is 6x faster (and 3x more expensive, with 3x more cores) in SPECrate 2017 Integer score (very multi-threaded) than 2017 (June 29th) EPYC Naples and 2017 Xeon Skylake-SP. 2023 Xeon Sapphire Rapids is 3x faster than 2017 EPYC Naples and 2017 Xeon Skylake-SP (same price, 2x more cores).

This year, a 4nm 128-core AMD EPYC will come out. In the next few years, we are probably going to see 344-core Intel Xeons and 512-core EPYCs, up from 28 cores and 32 cores respectively in 2017. Single-threaded performance is not stopping either.
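Breaking the server numbers down per core and per dollar takes only a few lines of Python. The multiples are the rounded ones from the post (6x/3x/3x for Genoa, 3x/1x/2x for Sapphire Rapids, both against the 2017 parts); the function name is my own.

```python
def per_core_and_per_dollar(speedup, price_ratio, core_ratio):
    """Return (throughput gain per core, throughput gain per dollar)."""
    return speedup / core_ratio, speedup / price_ratio


# Multiples relative to the 2017 EPYC Naples / Xeon Skylake generation.
genoa = per_core_and_per_dollar(speedup=6, price_ratio=3, core_ratio=3)
sapphire_rapids = per_core_and_per_dollar(speedup=3, price_ratio=1, core_ratio=2)

print(f"Genoa: {genoa[0]:.1f}x per core, {genoa[1]:.1f}x per dollar")
print(f"Sapphire Rapids: {sapphire_rapids[0]:.1f}x per core, "
      f"{sapphire_rapids[1]:.1f}x per dollar")
```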

flops don't mean much

Here's one of the many possible examples showing that gigaflops don't mean much:

AMD Radeon RX 7600S - 15.77 TFLOPS : https://www.techpowerup.com/gpu-specs/r ... 600s.c4016

AMD Radeon RX 5700 XT - 9.754 TFLOPS : https://www.techpowerup.com/gpu-specs/r ... 0-xt.c3339

5700 XT is 34% faster than 7600S, despite 7600S having 61.7% more gigaflops.

Or, put the other way: 7600S is about 25% slower than 5700 XT, despite 5700 XT having 38.1% fewer gigaflops.

It's a similar situation with Nvidia GeForce.

They want you to believe that their cards are faster than they really are.
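The percentages for the two Radeon cards can be checked with a small Python sketch. The TFLOPS values are the ones listed above and the 34% figure is the one from the post; the helper names are made up for illustration.

```python
tflops_7600s = 15.77
tflops_5700xt = 9.754
perf_5700xt_vs_7600s = 1.34  # "5700 XT is 34% faster", as stated in the post


def pct_more(a, b):
    """How many percent larger a is than b."""
    return (a / b - 1) * 100


def pct_less(a, b):
    """How many percent smaller a is than b."""
    return (1 - a / b) * 100


flops_more = pct_more(tflops_7600s, tflops_5700xt)
flops_less = pct_less(tflops_5700xt, tflops_7600s)
perf_slower = pct_less(1, perf_5700xt_vs_7600s)
# How many more flops the 7600S spends per unit of actual performance.
flops_per_perf = (tflops_7600s / tflops_5700xt) * perf_5700xt_vs_7600s

print(f"7600S has {flops_more:.1f}% more TFLOPS")
print(f"5700 XT has {flops_less:.1f}% fewer TFLOPS")
print(f"7600S is {perf_slower:.1f}% slower")
print(f"7600S needs {flops_per_perf:.2f}x the flops per unit of performance")
```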

5nm AMD EPYC Genoa 9654 has 96 cores, and each core is able to do AVX-512 & FMA (512-bit) instructions (new with Zen 4). Assuming two full-width 512-bit FMA operations per clock, that means theoretically 64 (fp32) gigaflops per core @ 1 GHz (64 floating-point operations per clock cycle per core). Zen 4 runs 512-bit operations on 256-bit datapaths, so the real per-clock peak may be closer to half of that. With all 96 cores fully active and utilized, the $11,805 360W EPYC 9654 runs @ 3.55 GHz. So 96 × 3.55 × 64 = 21.81 (fp32) TFLOPS, about as much as Radeon 6900 XT or RTX 3070 Ti. By the same math, it can theoretically do 10.9 fp64 TFLOPS. But again, FLOPS don't mean much.
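The theoretical peak above can be reproduced with a short Python sketch. It takes the post's figure of 64 fp32 operations per clock per core at face value, which corresponds to assuming two 512-bit FMA units per core; that unit count is an assumption behind the number, not a confirmed spec, and the function names are my own.

```python
def flops_per_clock(vector_bits, element_bits, fma_units):
    """Peak floating-point operations per clock for one core.

    Each FMA counts as 2 operations (one multiply and one add) per vector lane.
    """
    lanes = vector_bits // element_bits
    return lanes * 2 * fma_units


def peak_tflops(cores, clock_ghz, per_clock):
    """Theoretical peak TFLOPS for the whole chip."""
    return cores * clock_ghz * per_clock / 1000


# Assumption: two full-width 512-bit FMA units per core (implied by the 64 figure).
fp32_per_clock = flops_per_clock(512, 32, fma_units=2)
fp64_per_clock = flops_per_clock(512, 64, fma_units=2)

print(f"fp32: {peak_tflops(96, 3.55, fp32_per_clock):.2f} TFLOPS")
print(f"fp64: {peak_tflops(96, 3.55, fp64_per_clock):.2f} TFLOPS")
```

Passing `fma_units=1` gives the halved figures, if you would rather count the 256-bit datapaths.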
