• 21% faster general-purpose performance

• 42% faster AI inference performance

• 36% better energy efficiency (perf per watt)

• up to 3x more cache (only in the higher-end models)

• up to 16.7% higher memory bandwidth (depending on the model, from DDR5-4800 to DDR5-5600)

• support for new AMX instructions (intended for AI workloads)

• support for CXL Type 3 memory devices
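As a quick sanity check on the memory bandwidth figure in the list above, here is a minimal sketch of the arithmetic. It assumes the baseline is the previous generation's DDR5-4800 and that bandwidth scales linearly with the transfer rate; the function name is only illustrative.

```python
def percent_increase(old: float, new: float) -> float:
    """Relative increase from old to new, in percent."""
    return (new - old) / old * 100.0

# Transfer rates in MT/s (assumed: DDR5-4800 baseline, DDR5-5600 on the new parts)
ddr5_old = 4800
ddr5_new = 5600

gain = percent_increase(ddr5_old, ddr5_new)
print(f"Memory bandwidth gain: {gain:.1f}%")
```

With these inputs the gain rounds to 16.7%, matching the "up to" figure; models that stay on slower memory would see less.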

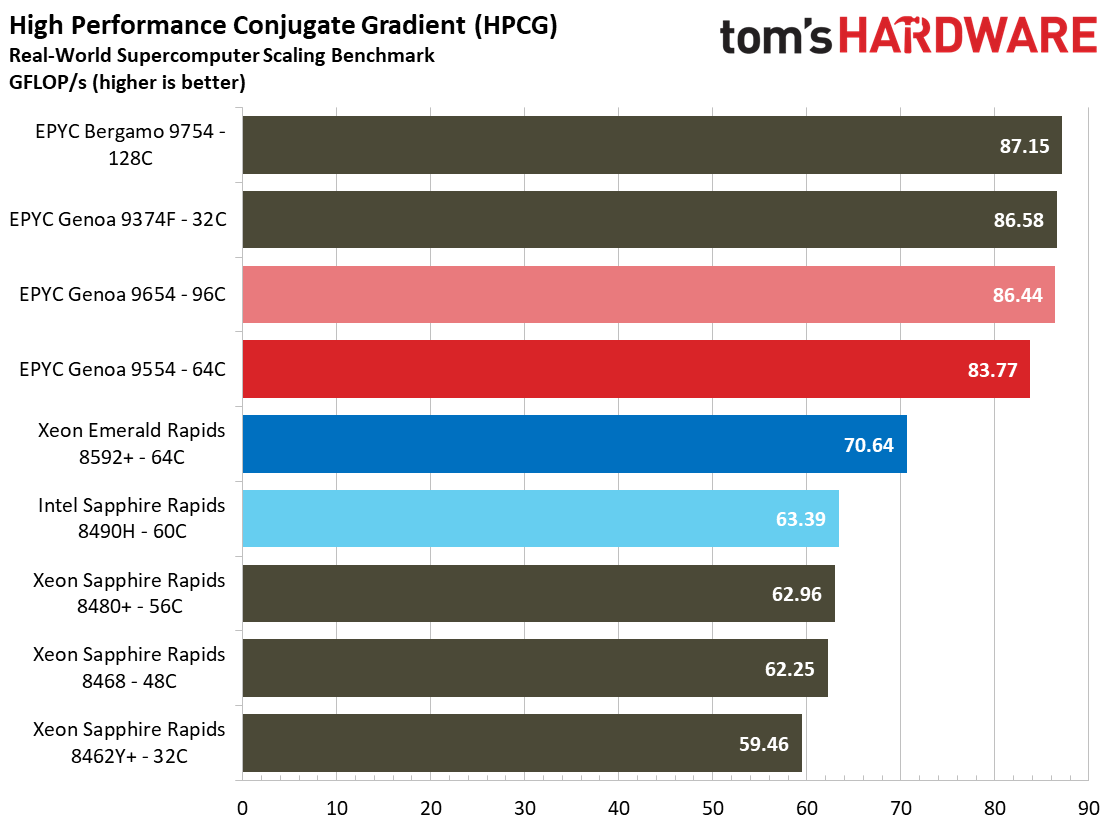

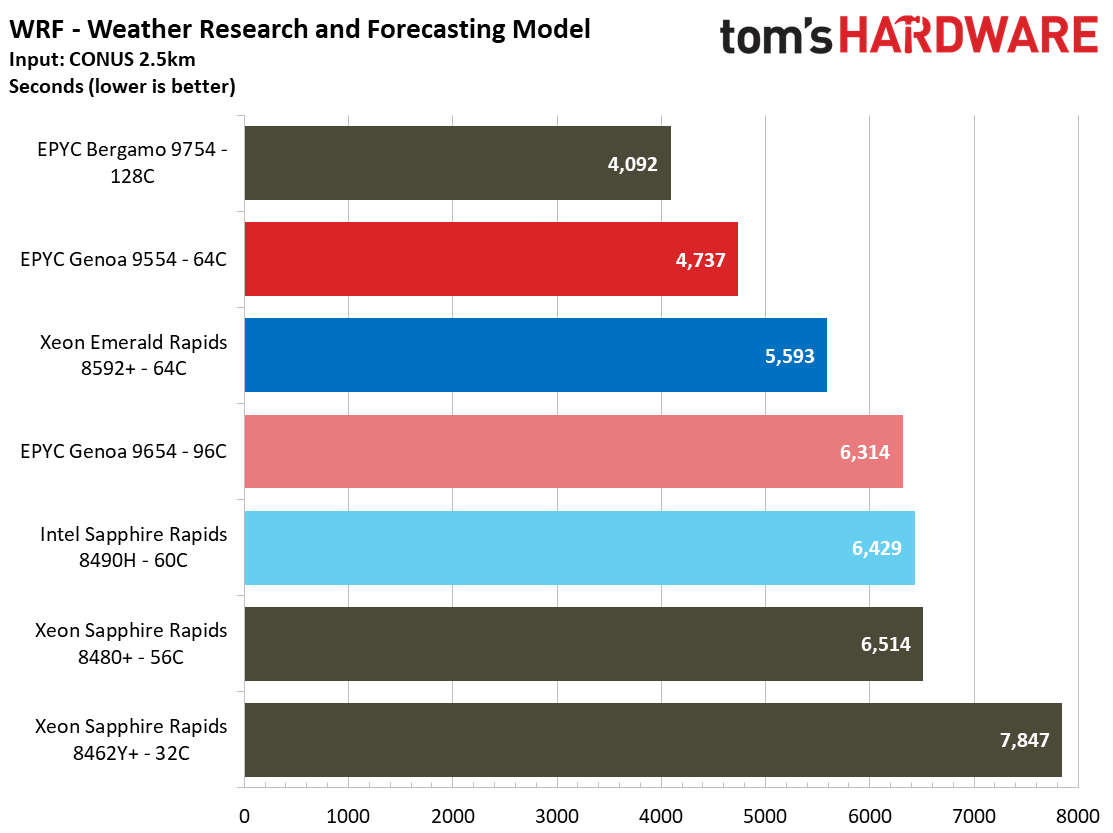

Here are three example performance comparisons from Paul Alcorn's review at Tom's Hardware:

The EPYC 9654 takes the lead in supercomputer test suites, though the core-dense Zen 4C Bergamo 9754 tops all the charts. The 8592+ shows some improvements over the previous generation 8490H, particularly in the Graph 500 test, but on a core-for-core basis, AMD still leads Intel.

The Weather Research and Forecasting model (WRF) is memory bandwidth sensitive, so it's no surprise to see the Genoa 9554 with its 12-channel memory controller outstripping the eight-channel Emerald Rapids 8592+. Again, the 9654's lackluster performance here is probably at least partially due to lower per-core memory throughput. There is a similar pattern with the NWChem computational chemistry workload, though the 9654 is more impressive in this benchmark.
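As an aside on the "per-core memory throughput" remark, a rough sketch of the theoretical peak numbers may help. It assumes the usual estimate of channels × transfer rate × 8 bytes per transfer, and the publicly listed configurations (EPYC 9554: 64 cores, EPYC 9654: 96 cores, both 12-channel DDR5-4800; Xeon 8592+: 64 cores, 8-channel DDR5-5600). Real sustained bandwidth is lower, so treat these as upper bounds rather than measurements.

```python
def peak_bandwidth_gbs(channels: int, mts: int, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (channels x MT/s x bytes per transfer)."""
    return channels * mts * bytes_per_transfer / 1000.0

# (name, cores, memory channels, transfer rate in MT/s); assumed spec-sheet values
cpus = [
    ("EPYC 9554", 64, 12, 4800),
    ("EPYC 9654", 96, 12, 4800),
    ("Xeon 8592+", 64, 8, 5600),
]

per_core = {}
for name, cores, channels, mts in cpus:
    total = peak_bandwidth_gbs(channels, mts)
    per_core[name] = total / cores
    print(f"{name}: {total:.1f} GB/s total, {per_core[name]:.1f} GB/s per core")
```

Under these assumptions the 9554 has the most peak bandwidth per core and the 96-core 9654 the least, which is consistent with the explanation offered in the review.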

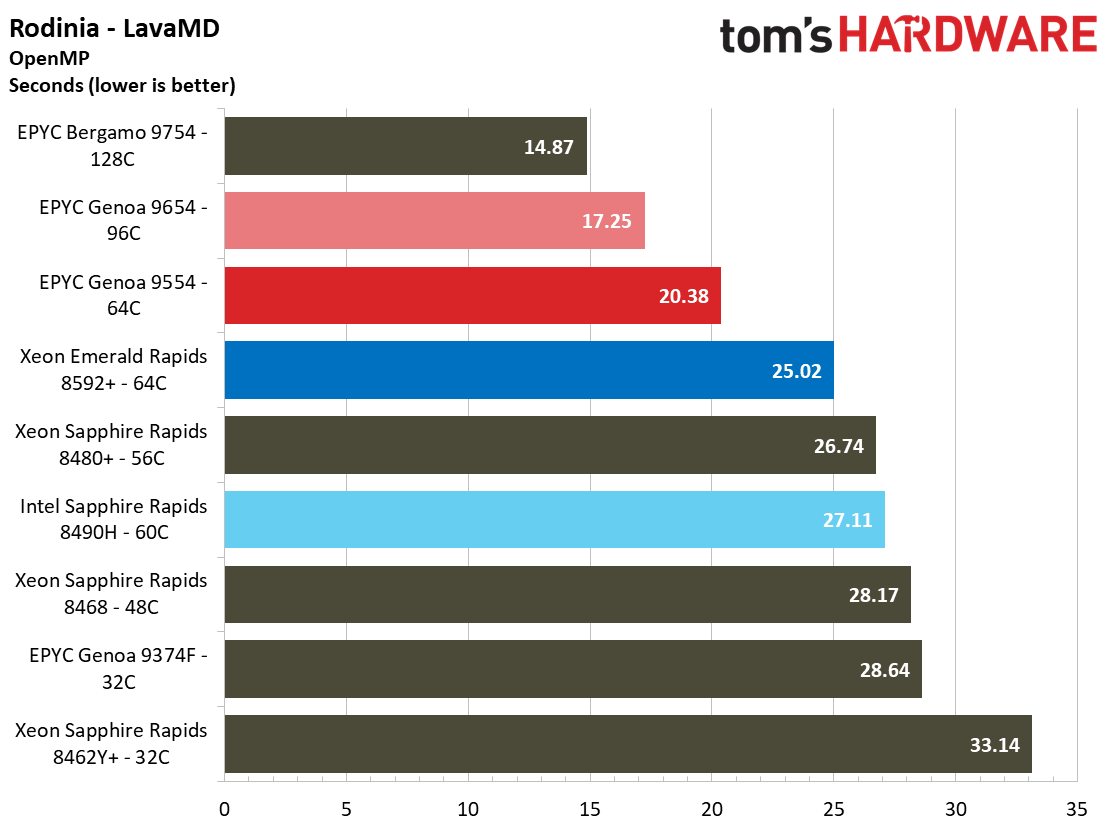

The 8592+ is impressive in the SciMark benchmarks, but falls behind in the Rodinia LavaMD test (Rodinia suite is focused on accelerating compute-intensive applications with accelerators). It makes up for that shortcoming with the Rodinia HotSpot3D and CFD Solver benchmarks, with the latter showing a large delta between the 8592+ and competing processors. The 64-core Genoa 9554 fires back in the OpenFOAM CFD (free, open source software for computational fluid dynamics) workloads, though.

Source: Tom's Hardware early review of the new Intel Xeon Emerald Rapids

I hope Intel's upcoming many-core CPUs (Granite Rapids?) will offer at least twice the general-purpose performance and at least twice the energy efficiency of Emerald Rapids, without prices rising by even a dollar. Perhaps they will even improve AI performance by another 10x. It would be really nice for that to happen soon.