Fully autonomous weapons, also known as "killer robots," would be able to select and engage targets without meaningful human control. Precursors to these weapons, such as armed drones, are being developed and deployed by nations including China, Israel, South Korea, Russia, the United Kingdom and the United States. There are serious doubts that fully autonomous weapons would be capable of meeting international humanitarian law standards, including the rules of distinction, proportionality, and military necessity, while they would threaten the fundamental right to life and principle of human dignity. Human Rights Watch calls for a preemptive ban on the development, production, and use of fully autonomous weapons. Human Rights Watch is a founding member and serves as global coordinator of the Campaign to Stop Killer Robots.

Drones may have attacked humans fully autonomously for the first time

Military drones may have autonomously attacked humans for the first time ever last year, according to a United Nations report. While the full details of the incident, which took place in Libya, haven’t been released and it is unclear if there were any casualties, the event suggests that international efforts to ban lethal autonomous weapons before they are used may already be too late.

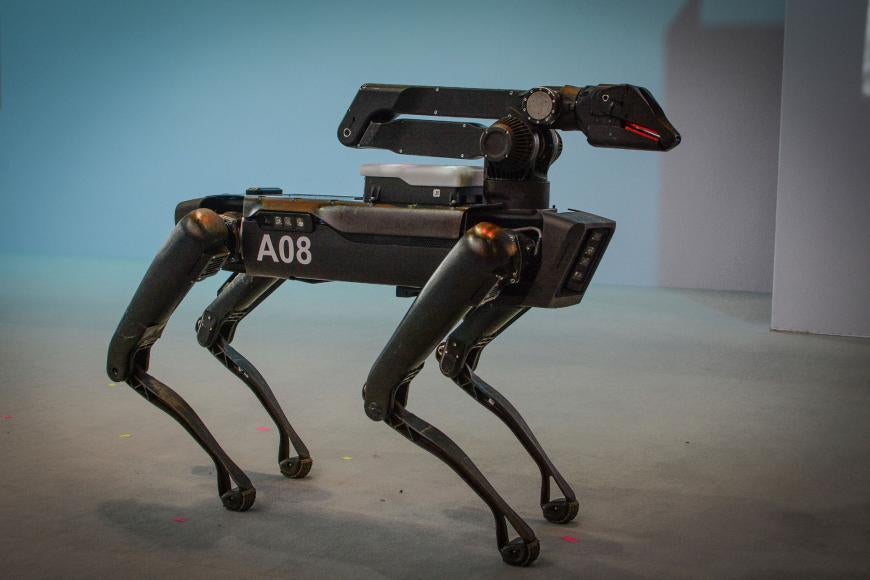

The robot in question is a Kargu-2 quadcopter produced by STM.

Was a flying killer robot used in Libya? Quite possibly

We may be living in a brave new world right now. Last year in Libya, a Turkish-made autonomous weapon, the STM Kargu-2 drone, may have “hunted down and remotely engaged” retreating soldiers loyal to the Libyan General Khalifa Haftar, according to a recent report by the UN Panel of Experts on Libya. Over the course of the year, the UN-recognized Government of National Accord pushed the general’s forces back from the capital Tripoli, signaling that it had gained the upper hand in the Libyan conflict. But the Kargu-2 signifies something perhaps even more globally significant: a new chapter in autonomous weapons, one in which they are used to fight and kill human beings based on artificial intelligence.

The Kargu is a “loitering” drone that can use machine learning-based object classification to select and engage targets, with swarming capabilities in development to allow 20 drones to work together. The UN report calls the Kargu-2 a lethal autonomous weapon. Its maker, STM, touts the weapon’s “anti-personnel” capabilities in a grim video showing a Kargu model in a steep dive toward a target in the middle of a group of manikins. (If anyone was killed in an autonomous attack, it would likely represent a historic first known case of artificial intelligence-based autonomous weapons being used to kill. The UN report heavily implies they were, noting that lethal autonomous weapons systems contributed to significant casualties of the manned Pantsir S-1 surface-to-air missile system, but is not explicit on the matter.)

Many people, including Stephen Hawking and Elon Musk, have said they want to ban these sorts of weapons, saying they can’t distinguish between civilians and soldiers. Others say the weapons will be critical in countering fast-paced threats like drone swarms and may actually reduce the risk to civilians because they will make fewer mistakes than human-guided weapons systems. Governments at the United Nations are debating whether new restrictions on combat use of autonomous weapons are needed. What the global community hasn’t done adequately, however, is develop a common risk picture. Weighing risk vs. benefit trade-offs will turn on personal, organizational, and national values, but determining where risk lies should be objective.

It’s just a matter of statistics.