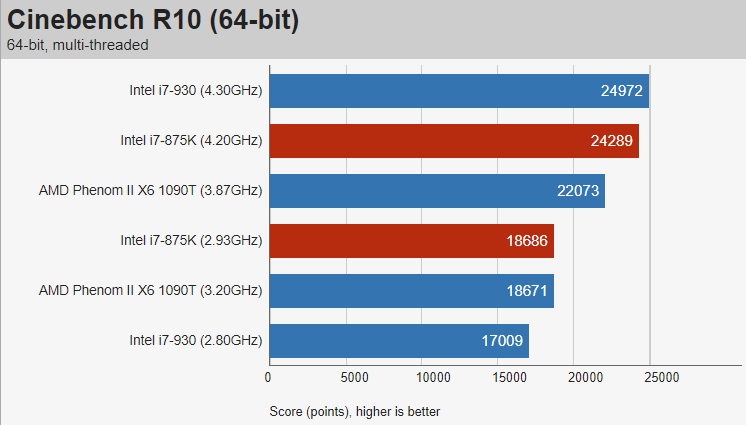

Then in July, the 45nm Lynnfield i7-875K arrived at a $342 MSRP, a whopping 53 USD (+18.33%) more than the 45nm Bloomfield i7-930 released just 4 months earlier, yet it was only 9.86% faster. Reviewers consistently called it too expensive for a four-core CPU and recommended AMD instead.
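A quick back-of-the-envelope check of what those two percentages mean together. This treats the 9.86% speedup and the 18.33% price increase as simple ratios, which is a simplification that ignores how the performance figure was averaged:

$$
\frac{\text{perf}_{\text{i7-875K}} / \text{perf}_{\text{i7-930}}}{\text{price}_{\text{i7-875K}} / \text{price}_{\text{i7-930}}} = \frac{1.0986}{1.1833} \approx 0.928
$$

In other words, the newer chip delivered roughly 7% less performance per dollar than the one it followed.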

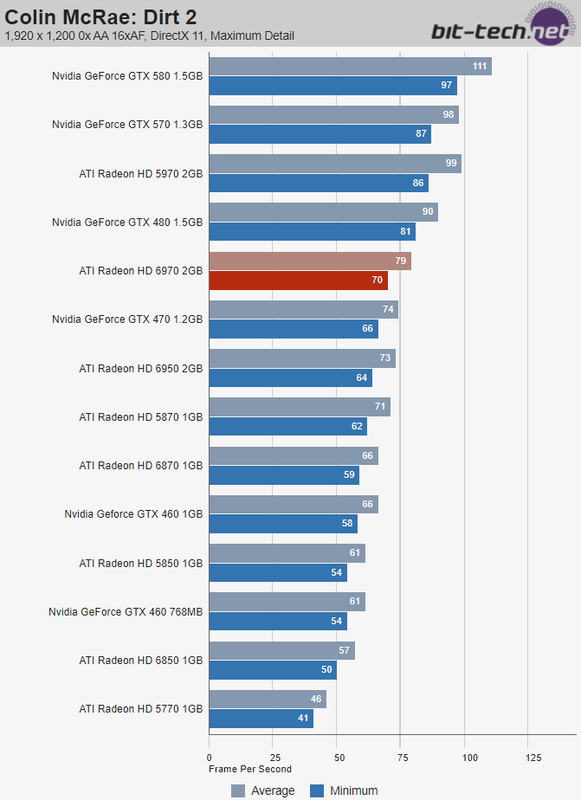

The Radeon HD 6970 came out in December. It was only 11.3% faster than the HD 5870 from September 2009, while being 33% more power-hungry and 25% more expensive. It was a terrible deal, and the first time in the history of graphics cards that 15 months brought such a small improvement (in fact, a decrease in value proposition).
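The "decrease in value proposition" can be made concrete with the same simple-ratio treatment. This assumes the quoted percentages apply uniformly, which is an approximation:

$$
\underbrace{\frac{1.113}{1.25} \approx 0.890}_{\text{performance per dollar}}
\qquad
\underbrace{\frac{1.113}{1.33} \approx 0.837}_{\text{performance per watt}}
$$

Relative to the HD 5870, that works out to roughly 11% less performance per dollar and about 16% less performance per watt.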

I should have understood then that Ray Kurzweil and others like him were wrong. But I didn't. I thought it was only a temporary situation and that things would get much, much, much better in the coming years. It took me many years to recognize my mistake, my gullibility, and my inexperience.