GPU and CPU news and discussions

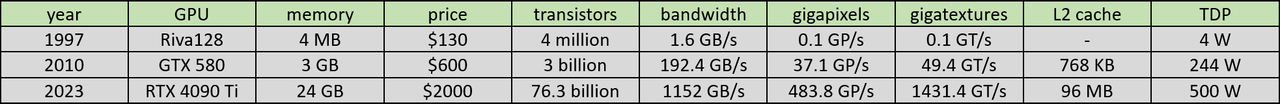

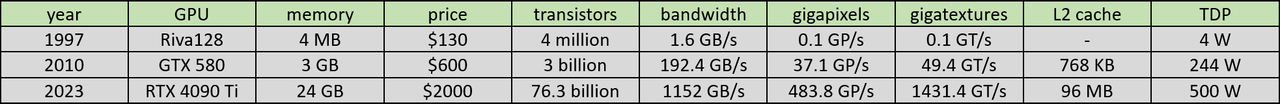

Nvidia GPU comparison 1997, 2010 and 2023

I made a simple comparison table of the top-of-the-line single-GPU Nvidia graphics cards from 1997, 2010 and 2023, so that there is a 13-year span between each. You can see for yourself how they have changed over the years. The changes since 1997 have been huge, but they were more substantial in the first 13 years: power requirements, die sizes and transistor counts increased enormously between 1997 and 2010. Of course, the transistor counts in the table cover only the GPU die itself, not the VRAM. Transistor count and memory both grew almost 1000x in the first 13 years, which is amazing. The price has also increased: by 4.6x in the first 13 years and 3.3x in the latter 13 years, 15.4x in total. The 4090 and 4090 Ti have 8x more VRAM than the 580.

The 580 is about 1200x faster than the Riva 128 (roughly; by some measures perhaps as much as 3000x) for 60x more electricity and heat, so the efficiency improvement (real performance per watt) is about 20x. The first GTX Titan in 2013 cost $1000, had 6 GB of VRAM and was about 87% faster than the 580. The GTX 980 in 2014 cost $550, had 4 GB of VRAM and was about 2x faster than the 580. In 2016, the Titan X (Pascal) was 4x faster with 4x more VRAM for $1200, about 2x the 580's price. The 4090 Ti is expected to be about 15x faster than the GTX 580 for 2x more energy, so the efficiency improvement is about 7.5x, which is still very significant. That's 20x versus 7.5x (150x in total over 26 years).

Things have slowed down since 2010: change in this space is now more gradual and moderate than it used to be. The limiting factors are die size, heat output, power requirements, quantum tunneling effects, and the design and manufacturing costs of new nodes.
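A quick way to sanity-check the efficiency figures above is to divide the speedup by the increase in power draw, then convert each 13-year total into a yearly rate. The sketch below is a minimal Python example that plugs in the post's rough estimates (about 1200x the speed for 60x the power, and a projected 15x for 2x); `efficiency_gain` and `annual_rate` are hypothetical helper names for illustration, not anything from the original table.

```python
"""Back-of-the-envelope efficiency arithmetic for the GPU comparison.

The ratios are the rough figures quoted in the post (GTX 580 vs Riva 128,
and a projected 4090 Ti vs GTX 580); they are estimates, not measurements.
"""


def efficiency_gain(speedup: float, power_ratio: float) -> float:
    """Performance-per-watt improvement = speedup / increase in power draw."""
    return speedup / power_ratio


def annual_rate(total_factor: float, years: float) -> float:
    """Compound annual growth rate that yields total_factor over `years`."""
    return total_factor ** (1.0 / years) - 1.0


# 1997 -> 2010: ~1200x faster for ~60x the power.
first_span = efficiency_gain(1200, 60)
# 2010 -> 2023: ~15x faster for ~2x the power (projected 4090 Ti).
second_span = efficiency_gain(15, 2)
# Efficiency gains multiply across consecutive spans.
total = first_span * second_span

print(f"1997-2010: {first_span:.1f}x perf/W, {annual_rate(first_span, 13):.1%} per year")
print(f"2010-2023: {second_span:.1f}x perf/W, {annual_rate(second_span, 13):.1%} per year")
print(f"1997-2023: {total:.0f}x perf/W over 26 years")
```

With these inputs, 20x over 13 years works out to roughly 26% better performance per watt each year, versus roughly 17% a year for the 7.5x of the second span.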

Last edited by Tadasuke on Sat Apr 15, 2023 2:08 pm, edited 1 time in total.

The global economy doubles its output every 15-20 years. Computer performance at a constant price doubles nowadays every 4 years on average. Livestock-as-food will globally stop being a thing by ~2050 (precision fermentation and more). Human stupidity, pride and depravity are the biggest problems of our world.

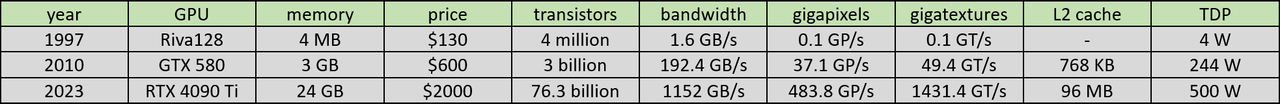

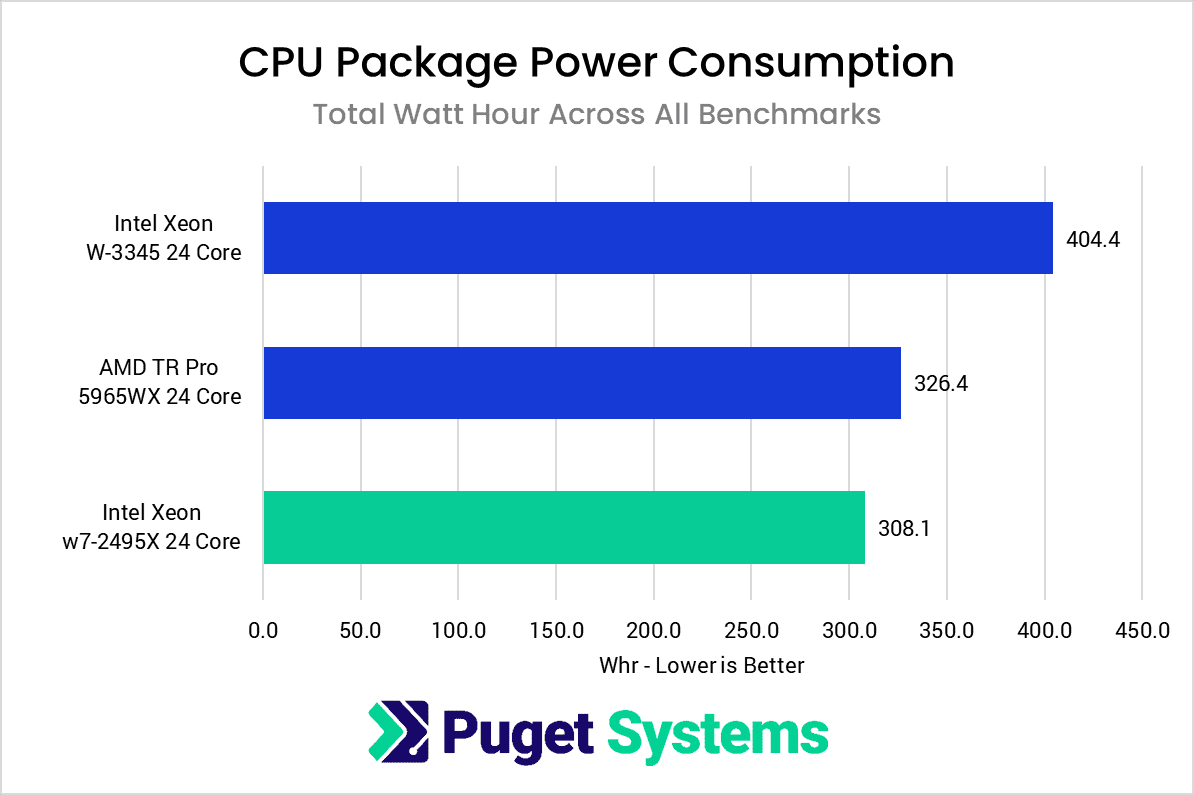

24-core Golden Cove vs 24-core Zen 3 workstation

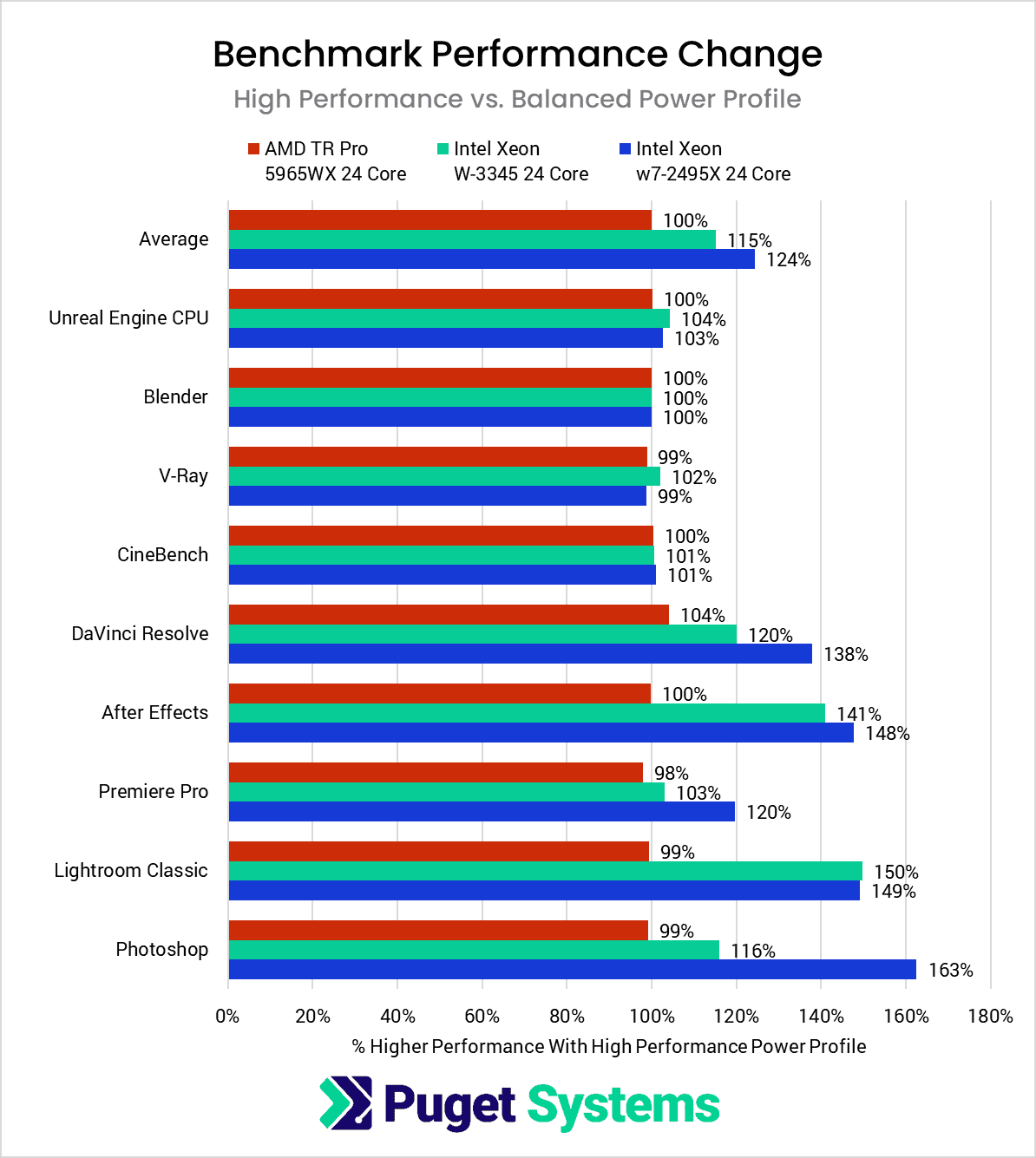

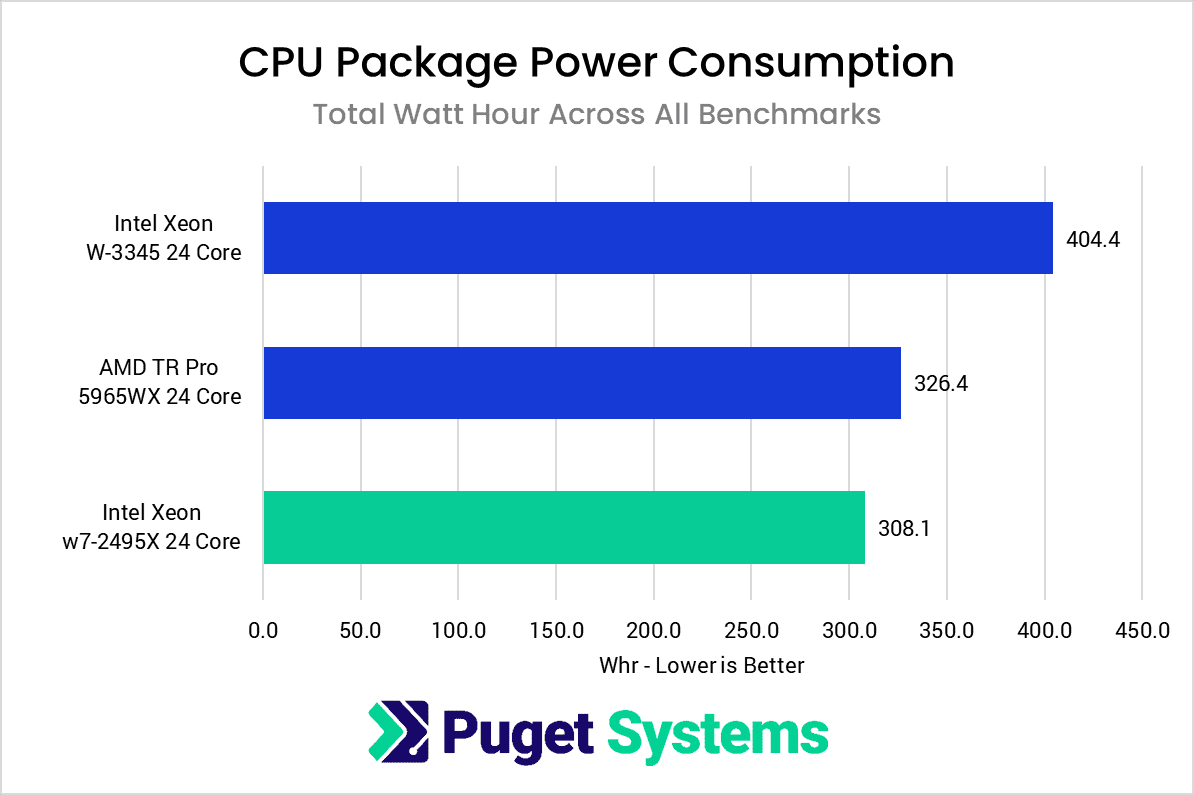

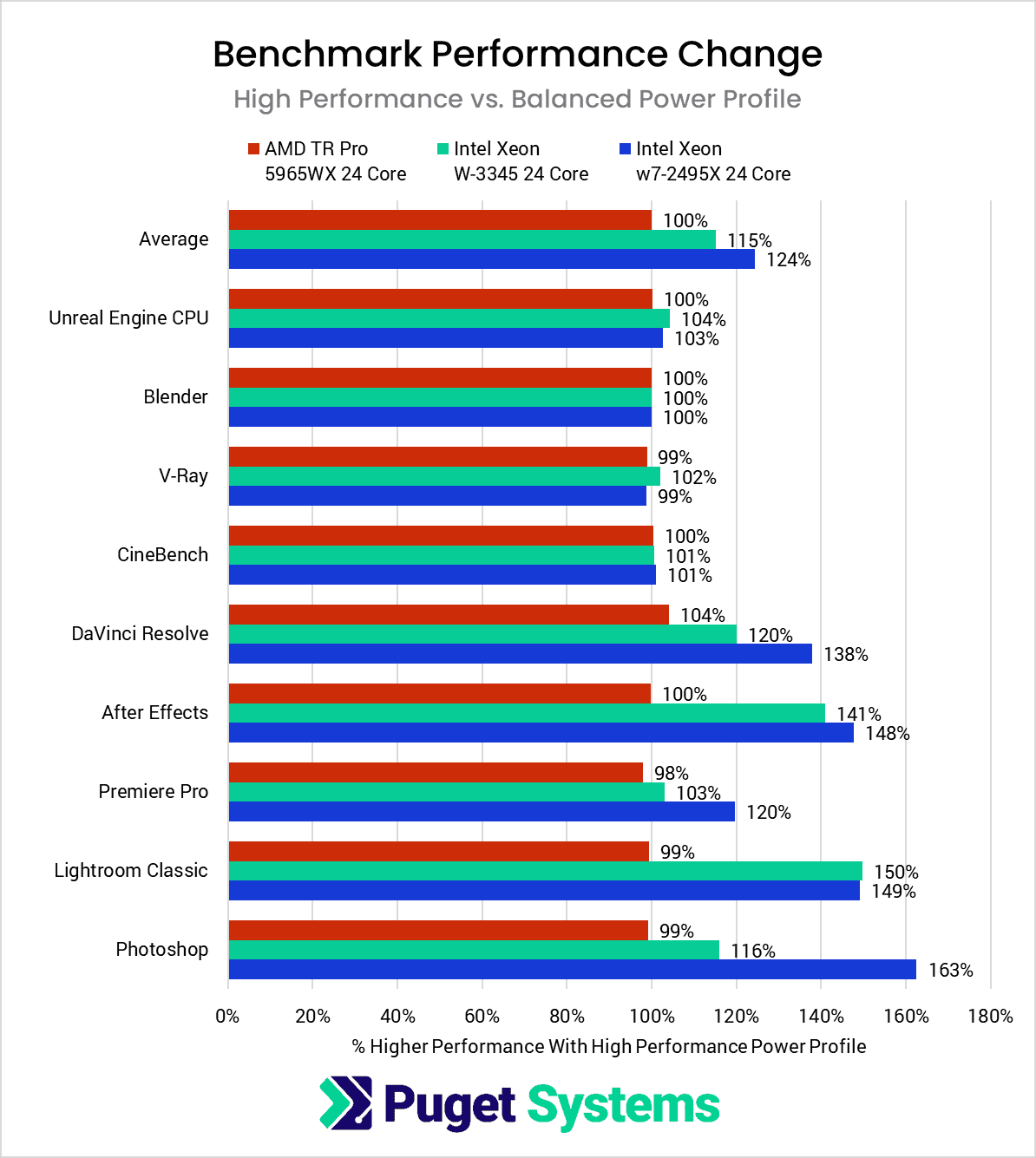

Sapphire Rapids looks to be more energy efficient than Zen 3 after all:

And faster as well:

source: https://www.pugetsystems.com/labs/artic ... on-review/

comparing some Nvidia graphics cards from Riva 128

Between the 1997 Riva 128 and the 1998 Riva TNT, performance and memory both doubled. The same happened from the Riva TNT to the 1999 Riva TNT2, and again from the Riva TNT2 to the GeForce 256 DDR. In Quake III at 800x600, you would get ~24 fps with a Riva 128, ~48 fps with a Riva TNT, ~96 fps with a Riva TNT2 and ~192 fps with a GeForce 256 DDR (~164 fps with the SDR version). Memory bandwidth rose 3x between the Riva 128 and the GeForce 256 DDR (pixel and texture fill rates by ~5x). The GeForce2 Ultra doubled performance again, but with a $499 (!) MSRP.

The GeForce 6800 Ultra Extreme in 2004 was the first card to reach ~100 gigaflops. A year later, the 7800 GTX reached ~200 gigaflops, and the 8800 Ultra in 2007 ~400 gigaflops. For a while, GPU performance (and even memory) really did double about every year, but that stopped being the case. Even when it was doubling, it came at the expense of power, cost and even physical size. Then the GeForce GTX 285 in 2009 reached ~700 gigaflops and the GeForce GTX 580 in 2010 ~1600 gigaflops, about as much as the 8th-gen consoles (the PS4 is about as fast as the 580). The Xbox Series S (the new gaming baseline, excluding the Switch) is ~4x faster than the Xbox One and ~3x faster than both the PS4 and the GTX 580 in GPU performance (~5x in CPU performance).
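To put the gigaflops figures above on a common footing, each jump can be converted into an implied doubling time, assuming smooth exponential growth between data points (a simplification, since real launches are discrete). This Python sketch uses only the rounded numbers quoted in the post; `doubling_time` is a hypothetical helper name.

```python
import math

# Approximate peak GFLOPS quoted in the post (rounded figures).
FLAGSHIPS = [
    (2004, "GeForce 6800 Ultra Extreme", 100),
    (2005, "GeForce 7800 GTX", 200),
    (2007, "GeForce 8800 Ultra", 400),
    (2009, "GeForce GTX 285", 700),
    (2010, "GeForce GTX 580", 1600),
]


def doubling_time(years: float, factor: float) -> float:
    """Years per doubling, assuming steady exponential growth by `factor` over `years`."""
    return years * math.log(2) / math.log(factor)


first_year, _, first_gflops = FLAGSHIPS[0]
for year, name, gflops in FLAGSHIPS[1:]:
    span = year - first_year
    factor = gflops / first_gflops
    print(f"{name}: {factor:.0f}x since {first_year}, "
          f"doubling every {doubling_time(span, factor):.2f} years")
```

By these figures, peak gigaflops grew 16x from 2004 to 2010, i.e. four doublings in six years, or one doubling roughly every 1.5 years.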

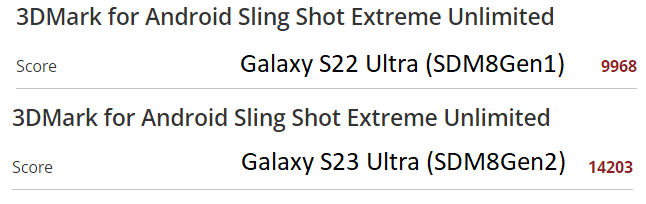

Samsung Galaxy S22 Ultra vs S23 Ultra 3DMark comparison

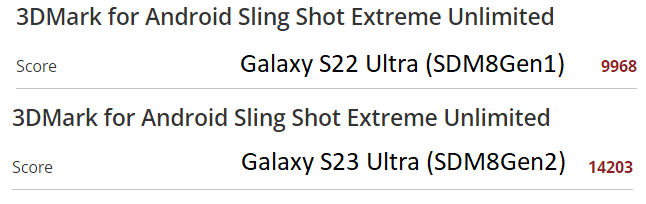

Here's a comparison of the two phones' scores in the 3DMark for Android Sling Shot Extreme Unlimited test:

As you can see, the S23 Ultra is 42.5% faster than the S22 Ultra, which came out a year earlier. This is in line with my estimate and prediction of a 10% to 49.9% improvement per year.

They won't get exactly 42.5% faster every year, but improvements won't be far from that.
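The 10% to 49.9% yearly band compounds quickly, so it helps to see what it means over several phone generations. Here is a minimal Python sketch, assuming a steady rate each year (real generations vary); `yearly_gain` and `compounded` are hypothetical helper names, and the only measured input is the 42.5% gain quoted above.

```python
def yearly_gain(old_score: float, new_score: float) -> float:
    """Fractional improvement between two benchmark scores (0.425 == 42.5%)."""
    return new_score / old_score - 1.0


def compounded(rate: float, years: int) -> float:
    """Total speedup after `years` of steady `rate` improvement per year."""
    return (1.0 + rate) ** years


# The post's predicted band: 10% to 49.9% per year.
LOW, HIGH = 0.10, 0.499

# S23 Ultra vs S22 Ultra, Sling Shot Extreme Unlimited, as quoted in the post.
observed = 0.425
assert LOW <= observed <= HIGH

for years in (1, 3, 5):
    print(f"{years} yr: {compounded(LOW, years):.2f}x to {compounded(HIGH, years):.2f}x "
          f"(at 42.5%/yr: {compounded(observed, years):.2f}x)")
```

`yearly_gain` can be fed the two raw scores from the chart to reproduce the 42.5% figure.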

Nanotechandmorefuture

- Posts: 478

- Joined: Fri Sep 17, 2021 6:15 pm

- Location: At the moment Miami, FL

Re: GPU and CPU news and discussions

It's so nice that those high-quality NVIDIA GPUs can do so much with little effort, especially when bought together with a nice setup.

AMD 2010-2022 mid-range CPU comparison

Here's the comparison; all three are six-core AMD CPUs:

| CPU | Year | Price | Die size | Architecture | Memory | Base clock | Boost clock | Cinebench R15 score |
|---|---|---|---|---|---|---|---|---|
| Phenom II X6 1055T | 2010 | $199 | 346 mm² | K10 | DDR3-1333 | 2.8 GHz | 3.3 GHz | 446 |
| Ryzen 5 1600X | 2017 | $249 | 213 mm² | Zen 1 | DDR4-2666 | 3.6 GHz | 4.0 GHz | 1126 (2.52x the previous row) |
| Ryzen 5 7600X | 2022 | $299 | 193 mm² | Zen 4 | DDR5-5200 | 4.7 GHz | 5.3 GHz | 2415 (2.14x the previous row) |

I think there's a clear trend here: prices are going up, mostly because of inflation ($199 in 2010 is $275 today), die sizes are getting smaller, and frequencies, memory speeds and benchmark scores are all going up. Of course, Cinebench R15 isn't the only benchmark out there, but I'm trying to keep things simple, and it's one of the more standard ones. So the 7600X looks to be about 5.4x faster than the Phenom II X6 1055T, which had the same number of cores 13 years ago. Things aren't stagnant, but they aren't evolving very quickly either.

In 2010, PC enthusiasts regarded the Pentium E6600 or any Core 2 Duo as weak, the Phenom II X6 as mid-range and pretty good, and the i7-980 as high-end.
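The ratios in the comparison above can also be turned into a yearly growth rate and a rough performance-per-dollar figure. This Python sketch uses the table's prices and Cinebench R15 scores plus the post's own inflation figure ($199 in 2010 ≈ $275 today); it assumes the 7600X's 2022 launch price can be treated as roughly current dollars, which ignores the small 2022-2023 inflation. `cagr` is a hypothetical helper name.

```python
# Figures from the comparison: (name, launch year, launch price in USD, Cinebench R15 score).
CPUS = [
    ("Phenom II X6 1055T", 2010, 199, 446),
    ("Ryzen 5 1600X", 2017, 249, 1126),
    ("Ryzen 5 7600X", 2022, 299, 2415),
]

# The post's own inflation figure: $199 in 2010 is about $275 today.
INFLATION_2010_TO_TODAY = 275 / 199


def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate from `start` to `end` over `years`."""
    return (end / start) ** (1.0 / years) - 1.0


base_name, base_year, base_price, base_score = CPUS[0]
for name, year, price, score in CPUS[1:]:
    years = year - base_year
    print(f"{name} vs {base_name}: {score / base_score:.2f}x in {years} years "
          f"({cagr(base_score, score, years):.1%} per year)")

# Score per dollar, newest CPU vs the 2010 CPU at its inflation-adjusted price.
# Assumption: the 2022 price is treated as current dollars.
newest_name, _, newest_price, newest_score = CPUS[-1]
base_real_price = base_price * INFLATION_2010_TO_TODAY
value_gain = (newest_score / newest_price) / (base_score / base_real_price)
print(f"{newest_name}: {value_gain:.1f}x the score per inflation-adjusted dollar")
```

With these numbers, the 5.4x speedup over 12 launch years works out to roughly 15% per year, and the 7600X delivers roughly 5x the Cinebench score per inflation-adjusted dollar.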

AMD Ryzen 8000 "Granite Ridge" Zen 5 Processor to Max Out at 16 Cores

source: https://www.techpowerup.com/308614/amd- ... t-16-cores

AMD's next-generation Ryzen 8000 "Granite Ridge" desktop processor, based on the "Zen 5" microarchitecture, will continue to top out at 16-core/32-thread as the maximum CPU core-count possible, says a report by PC Games Hardware. The processor will retain the chiplet design of the current Ryzen 7000 "Raphael" processor, with two 8-core "Zen 5" CCDs, and one I/O die. It's very likely that AMD will reuse the same 6 nm client I/O die (cIOD) as "Raphael," just the way it used the same 12 nm cIOD between Ryzen 3000 "Matisse" and Ryzen 5000 "Vermeer;" but with updates that could enable higher DDR5 memory speeds. Each of the up to two "Eldora" Zen 5 CCDs has 8 CPU cores, with 1 MB of dedicated L2 cache per core, and 32 MB of shared L3 cache. The CCDs are very likely to be built on the TSMC 3 nm EUV silicon fabrication process.

Perhaps the most interesting aspect of the PCGH leak would have to be the TDP numbers being mentioned, which continue to show higher-performance SKUs with 170 W TDP, and lower tiers with 65 W TDP. With its CPU core-counts not seeing increases, AMD would bank on not just the generational IPC increase of its "Zen 5" cores, but also max out performance within the power envelope of the new node, by dialing up clock speeds. AMD could ride out 2023 with its Ryzen 7000 "Zen 4" processors on the desktop platform, with "Granite Ridge" slated to enter production only by Q1-2024. The company could update its product stack in the meantime, perhaps even bring the 4 nm "Phoenix" monolithic APU silicon to the Socket AM5 desktop platform. Ryzen 8000 is expected to retain full compatibility with existing Socket AM5, and AMD 600-series chipset motherboards.

weatheriscool

- Posts: 13910

- Joined: Sun May 16, 2021 6:16 pm

Re: GPU and CPU news and discussions

AMD CTO Confirms It'll Use Hybrid Designs in Future CPUs

The company is looking at changing the types of cores it uses in future CPUs instead of just increasing the number of them.

By Josh Norem May 19, 2023

https://www.extremetech.com/computing/a ... uture-cpus

Intel and AMD have taken very different paths to arrive at almost the same spot in the CPU hierarchy. Intel famously adopted a hybrid big.LITTLE core architecture for its Alder and Raptor Lake CPUs, while AMD has stuck to its guns with a standard Zen 3/4 core design so far. That's paid off for both companies as they are neck-and-neck in the outright performance war, but AMD can no longer afford to ignore the benefits of a hybrid design. In a recent interview, its CTO explicitly stated that the company would move to a hybrid architecture for client CPUs, albeit at some undetermined point in the future.

AMD's plans were revealed by Mark Papermaster, the company's CTO since 2011. He was speaking to Tom's Hardware on the sidelines of a tech conference in Belgium. Mr. Papermaster was asked about AMD's history of sticking with 16 cores at the top of its stack and whether the company still sees that as the "sweet spot" for client CPUs. The CTO began by stating that AMD has already started deviating from tradition by using dedicated AI hardware in its current Ryzen 7040 mobile APUs and that this trend of specialized hardware will continue in the future, as he segued to discuss hybrid architectures.

weatheriscool

Re: GPU and CPU news and discussions

Samsung To Officially Unveil Its 3nm, 4nm Technologies In June, With Up To 34 Percent Power Efficiency Improvements

Omar Sohail

https://wccftech.com/samsung-unveiling- ... s-in-june/

In an attempt to compete with TSMC while also making efforts to take its foundry business to new heights, Samsung is all set to unveil its 3nm and 4nm technologies at the VLSI Symposium 2023 in June. Coming to the specifics, the Korean giant will showcase the benefits of its SF3 and SF4X processes, and we will outline their advantages here.

Samsung is not making a direct comparison to its first-generation 3nm process but claims that its SF3 node is 22 percent faster than SF4 (4nm LPP).

The SF3 process will utilize Samsung’s 3nm GAP technology and will rely on GAA, or Gate-All-Around transistors, which the manufacturer refers to as MBCFETs, or Multi-Bridge-Channel Field-Effect Transistors. This approach is said to bring further improvements to SF3, but strangely enough, Samsung has not directly compared this technology to its first-generation 3nm one, suggesting that the difference may not be large.

weatheriscool

Re: GPU and CPU news and discussions

Intel Announces First CPU Rebrand in 15 Years for Meteor Lake

The company is resetting the dial on its CPU naming scheme and starting over with the number 1.

By Josh Norem June 15, 2023

https://www.extremetech.com/computing/i ... eteor-lake

Last month, Intel confirmed it was planning to change its CPUs' branding when its upcoming 14th Generation Meteor Lake platform launches later this year. Now the company has made it official, and it's big news as it's the first change to its branding since it adopted the Intel Core brand in 2006. It later added the "i" to the brand in 2008, and now it's starting almost from scratch for its next launch and into the future.

In the future, it'll be segmenting its client CPUs into either mainstream or performance categories, denoted by the word "Ultra" in the brand or the lack of it. The numbering will stay the same as far as 3/5/7/9 goes but without the "i" involved. The generational handle will still be there too, but not at 14 like we expected, starting over with the number one. That means we'll see names like Intel Core Ultra 9 1009K instead of 14900K, or something along those lines.