5th April 2018

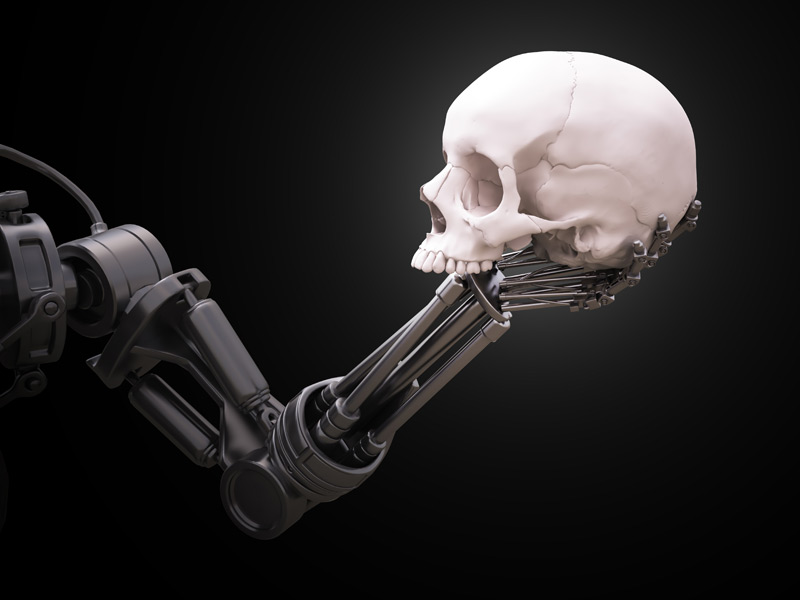

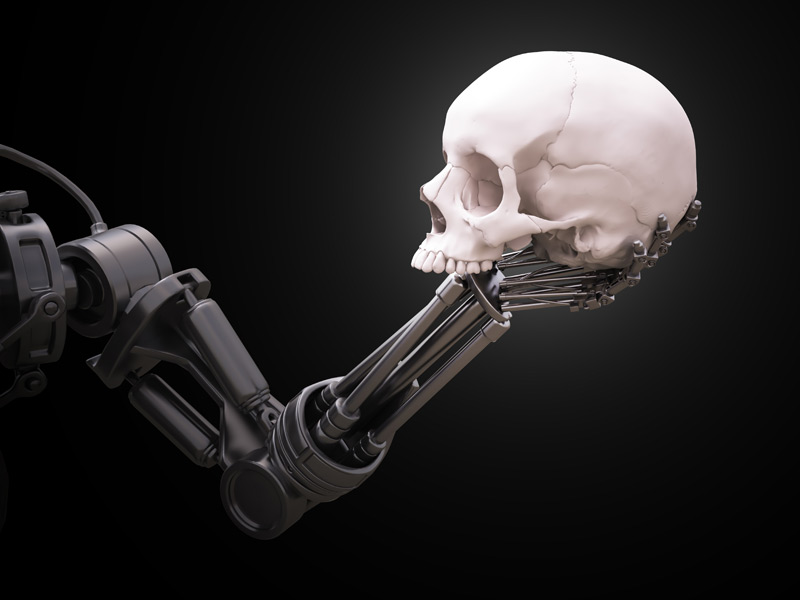

Experts call for boycott of South Korean university developing AI weapons

Artificial intelligence experts from 30 countries are boycotting a South Korean university over concerns that a new lab in partnership with a leading defence company could lead to autonomous weapons lacking human control.

The Korea Advanced Institute of Science and Technology (KAIST) is a public research university in Daejeon, South Korea. It is collaborating with defence manufacturer Hanwha Systems, which makes cluster munitions that are banned in 120 countries. Together, KAIST and Hanwha Systems plan to develop "AI-based command and decision systems, composite navigation algorithms for mega-scale unmanned undersea vehicles, AI-based smart aircraft training systems, and AI-based smart object tracking and recognition technology."

In their letter, signed by 50 researchers, the organisers of the boycott state:

As researchers and engineers working on artificial intelligence and robotics, we are greatly concerned by the opening of a "Research Center for the Convergence of National Defense and Artificial Intelligence" at KAIST in collaboration with Hanwha Systems, South Korea's leading arms company. It has been reported that the goals of this Center are to "develop artificial intelligence (AI) technologies to be applied to military weapons, joining the global competition to develop autonomous arms."

At a time when the United Nations is discussing how to contain the threat posed to international security by autonomous weapons, it is regrettable that a prestigious institution like KAIST looks to accelerate the arms race to develop such weapons. We therefore publicly declare that we will boycott all collaborations with any part of KAIST until such time as the President of KAIST provides assurances, which we have sought but not received, that the Center will not develop autonomous weapons lacking meaningful human control. We will, for example, not visit KAIST, host visitors from KAIST, or contribute to any research project involving KAIST.

If developed, autonomous weapons will be the third revolution in warfare. They will permit war to be fought faster and at a scale greater than ever before. They have the potential to be weapons of terror. Despots and terrorists could use them against innocent populations, removing any ethical restraints. This Pandora's box will be hard to close if it is opened. As with other technologies banned in the past like blinding lasers, we can simply decide not to develop them. We urge KAIST to follow this path, and work instead on uses of AI to improve and not harm human lives.

"There are plenty of great things you can do with AI that save lives, including in a military context, but to openly declare the goal is to develop autonomous weapons and have a partner like this sparks huge concern," said Toby Walsh, organiser of the boycott and professor at the University of New South Wales. "This is a very respected university partnering with a very ethically dubious partner that continues to violate international norms."
