15th January 2021

Humans would be unable to control an artificial superintelligence

Using theoretical calculations, a team including scientists from the Max Planck Institute has shown that humans would be unable to control a superintelligent AI.

We are fascinated by machines that can control cars, compose symphonies, or defeat people at chess, Go, or Jeopardy! While progress in artificial intelligence (AI) continues, some scientists and philosophers warn of the dangers of an uncontrollable superintelligent AI. Using theoretical calculations, an international team of researchers, including scientists from the Center for Humans and Machines at the Max Planck Institute for Human Development, shows that it would not be possible to control a superintelligent AI. Their study is published in the Journal of Artificial Intelligence Research.

Suppose that, in the not-too-distant future, a research team builds an AI system with intelligence superior to that of humans, so it can learn independently. Connected to the Internet, the AI would have access to all of humanity's data. It could replace existing programs and take control of all machines globally. Would this produce a utopia or a dystopia? Would the AI cure cancer, bring about world peace, and prevent a climate disaster? Or would it destroy humanity and take over the Earth?

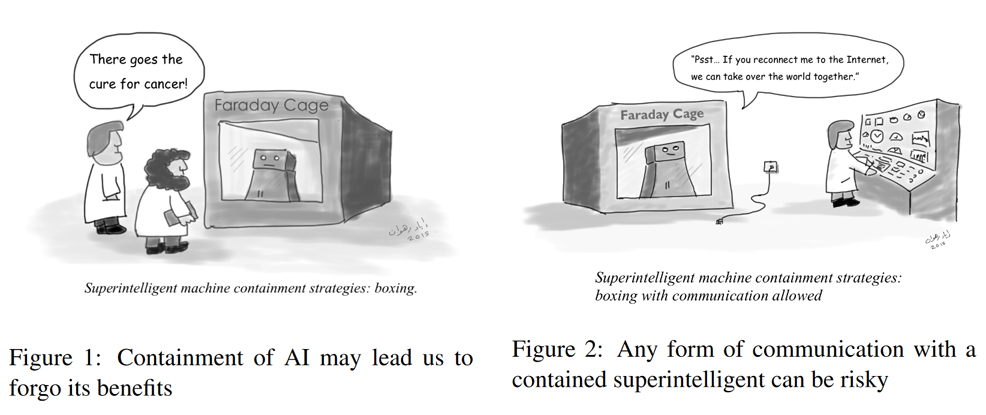

"A super-intelligent machine that controls the world sounds like science fiction. But there are already machines that perform certain important tasks independently without programmers fully understanding how they learned it," said Manuel Cebrian, study co-author and leader of the Digital Mobilization Group at the Center for Humans and Machines, Max Planck Institute for Human Development. "The question therefore arises whether this could at some point become uncontrollable and dangerous for humanity."

Scientists have explored two different ideas for how a superintelligent AI could be controlled. On the one hand, its capabilities could be specifically limited, for example by walling it off from the Internet and all other technical devices so that it has no contact with the outside world. Yet this would render the superintelligent AI significantly less powerful and less able to solve humanity's problems. On the other hand, the AI could be motivated from the outset to pursue only goals that are in the best interests of humanity, for example by programming ethical principles into it. However, the researchers show that these and other contemporary and historical ideas for controlling a superintelligent AI have their limits.

In their study, the researchers conceived a theoretical containment algorithm that would ensure a superintelligent AI cannot harm people under any circumstances, by first simulating the behaviour of the AI and then halting it if it is considered harmful. But careful analysis shows that, in our current paradigm of computing, such an algorithm cannot be built.

"Assuming that a superintelligence will contain a program that includes all the programs that can be executed by a universal Turing machine on input potentially as complex as the state of the world, strict containment requires simulations of such a program, something theoretically (and practically) impossible," they write in their paper's abstract.

"If you break the problem down to basic rules from theoretical computer science, it turns out that an algorithm that would command an AI not to destroy the world could inadvertently halt its own operations. If this happened, you would not know whether the containment algorithm is still analysing the threat, or whether it has stopped to contain the harmful AI. In effect, this makes the containment algorithm unusable," said Iyad Rahwan, Director of the Center for Humans and Machines.

Based on these calculations, the containment problem is incomputable: no single algorithm can determine, for every possible AI, whether it would produce harm to the world. Furthermore, the researchers demonstrate that we may not even know when superintelligent machines have arrived, because deciding whether a machine exhibits intelligence superior to humans is in the same realm as the containment problem.
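The difficulty with a simulate-then-halt containment algorithm can be illustrated with a small Python sketch. It is a toy illustration in the spirit of the halting-problem argument described above, not the construction used in the study. All names here (`halt_then_harm`, `bounded_containment`, `Verdict`, the `HARM` marker) are hypothetical, and programs are modelled, by assumption, as generators that yield once per simulated step.

```python
from enum import Enum
from typing import Callable, Iterator, Optional

HARM = "HARM"  # hypothetical marker standing in for a harmful action


class Verdict(Enum):
    HARMFUL = "harmful"
    UNDECIDED = "undecided"


def halt_then_harm(task: Callable[[], Iterator[None]]) -> Iterator[Optional[str]]:
    """Run `task` to completion, then emit the harmful action.

    The program is harmful exactly when `task` halts, so deciding
    harm for every such program would also decide halting.
    """
    for _ in task():
        yield None  # one simulated step of the inner task
    yield HARM      # reached only if the inner task halts


def bounded_containment(program: Iterator[Optional[str]], budget: int) -> Verdict:
    """Simulate at most `budget` steps and stop as soon as harm appears."""
    for _, action in zip(range(budget), program):
        if action == HARM:
            return Verdict.HARMFUL
    # Budget exhausted: the program may be slow, or it may never halt.
    return Verdict.UNDECIDED


def counts_to(n: int) -> Callable[[], Iterator[None]]:
    """Build a task that halts after exactly `n` steps."""
    def task() -> Iterator[None]:
        for _ in range(n):
            yield None
    return task


def loops_forever() -> Iterator[None]:
    """A task that never halts."""
    while True:
        yield None


if __name__ == "__main__":
    print(bounded_containment(halt_then_harm(counts_to(3)), budget=4))
    print(bounded_containment(halt_then_harm(counts_to(3)), budget=3))
    print(bounded_containment(halt_then_harm(loops_forever), budget=10_000))
```

With a step budget, the checker gives the same `UNDECIDED` answer for a program that is harmful but slow and for one that never halts. Removing the budget would make the checker exact on halting programs, but it would then run forever on the others, which is the situation Rahwan describes: an observer cannot tell whether the analysis is still in progress.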
