22nd March 2023

New card from Nvidia will accelerate AI

U.S. chip giant Nvidia has launched the H100 NVL, a next-generation hardware accelerator for large language models.

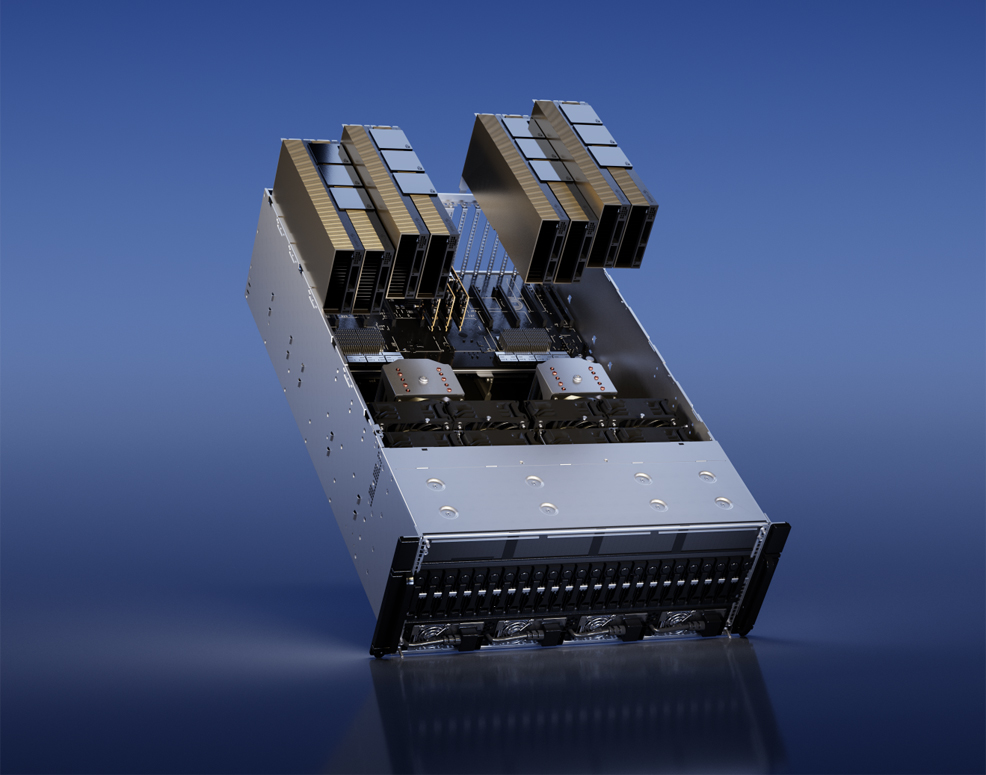

In May 2020, Nvidia released the A100, a graphics processing unit (GPU) designed to accelerate high-performance computing (HPC) workloads. Since then, it has become a popular choice among AI researchers and computer scientists working with large datasets, thanks to a performance improvement of up to 20x over the previous generation of technology. One of the A100's key features is its Tensor Cores, which are designed specifically to speed up the matrix multiplication operations that are crucial for deep learning algorithms.

In March 2022, Nvidia announced a successor, the H100. Built on a 4-nanometre (nm) manufacturing process, it features 80 billion transistors, compared to the 54.2 billion found in the A100. This provided six times the speed, double the memory and 3.5 times the energy efficiency.

This week, the company has launched a new variant of the H100, called the H100 NVL. While the original H100 has 80GB of memory, the H100 NVL has 94GB and is available in a dual configuration for a total of 188GB. This provides more memory per GPU than any other Nvidia part to date. Combined, the pair can deliver 7.8 terabytes per second (TB/sec) of memory bandwidth, compared to a maximum of 3.35TB/sec for the earlier H100.

When measured against the older A100 (in tests using 8 x H100 NVLs vs. 8 x A100s), the new card shows an overall 12x gain in the inference throughput of GPT-3. This will make the H100 NVL a highly attractive option for running the next generation of large language models.

"The rise of generative AI is requiring more powerful inference computing platforms," said Jensen Huang, founder and CEO of Nvidia. "The number of applications for generative AI is infinite, limited only by human imagination. Arming developers with the most powerful and flexible inference computing platform will accelerate the creation of new services that will improve our lives in ways not yet imaginable."
"Nvidia's new high-performance H100 inference platform can enable us to provide better and more efficient services to our customers with our state-of-the-art generative models, powering a variety of NLP applications such as conversational AI, multilingual enterprise search and information extraction," said Aidan Gomez, CEO of Canadian startup Cohere.

The H100 NVL is scheduled to be available in the second half of this year. Nvidia has yet to reveal a price, but given its top-end specifications, the product is likely to be expensive. A single H100 can sometimes be found for around $28,000, so a brand new pair of H100 NVLs in a dual configuration could cost double or even triple that.
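For readers who want to check the numbers, the figures quoted in this article can be reproduced with a few lines of arithmetic. The Python sketch below is illustrative only: the constant and function names are made up for this example, the per-GPU bandwidth assumes the combined 7.8TB/sec splits evenly across the two GPUs, and the price range simply restates the "double or even triple" guess rather than any announced pricing.

```python
# Figures as quoted in the article; names are hypothetical, chosen for this sketch.
A100_TRANSISTORS_BN = 54.2         # billions of transistors
H100_TRANSISTORS_BN = 80.0         # billions of transistors
H100_MEMORY_GB = 80                # original H100
H100_NVL_MEMORY_GB = 94            # per GPU in the NVL variant
H100_BANDWIDTH_TBS = 3.35          # maximum for the earlier H100
H100_NVL_PAIR_BANDWIDTH_TBS = 7.8  # combined figure for the dual configuration
H100_STREET_PRICE_USD = 28_000     # "can sometimes be found for around"


def summarize_h100_nvl():
    """Derive the comparison figures implied by the quoted specifications."""
    # Assumption: the combined bandwidth is shared equally by the two GPUs.
    per_gpu_bandwidth = H100_NVL_PAIR_BANDWIDTH_TBS / 2
    return {
        "pair_memory_gb": 2 * H100_NVL_MEMORY_GB,
        "memory_gain_per_gpu": H100_NVL_MEMORY_GB / H100_MEMORY_GB,
        "per_gpu_bandwidth_tbs": per_gpu_bandwidth,
        "bandwidth_gain_per_gpu": per_gpu_bandwidth / H100_BANDWIDTH_TBS,
        "transistor_ratio_h100_vs_a100": H100_TRANSISTORS_BN / A100_TRANSISTORS_BN,
        # Speculative: "double or even triple" the price of a single H100.
        "pair_price_range_usd": (2 * H100_STREET_PRICE_USD, 3 * H100_STREET_PRICE_USD),
    }


if __name__ == "__main__":
    for name, value in summarize_h100_nvl().items():
        print(f"{name}: {value}")
```

Under these assumptions, each GPU in the pair would have roughly 16 to 18 percent more memory and bandwidth than the earlier H100, and the speculated price for a pair would fall between $56,000 and $84,000.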
