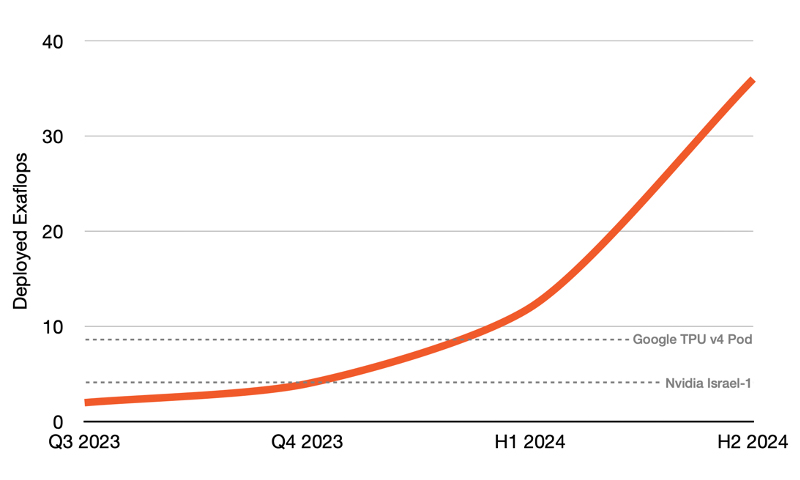

25th July 2023

World's largest supercomputer for training AI revealed

Cerebras Systems, in collaboration with G42, has revealed Condor Galaxy – a network of nine interconnected supercomputers, which together will provide up to 36 exaFLOPS for training AI.

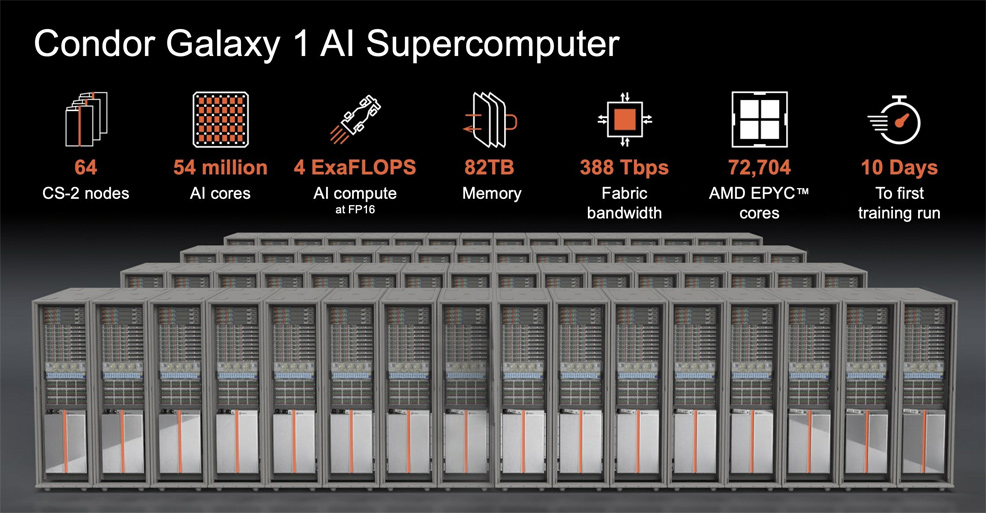

In recent years, and particularly over the last nine months, major strides have been made in artificial intelligence (AI). Large language models (LLMs) and other forms of generative AI, for example, have reached unprecedented heights. Building on advances in machine learning and natural language processing, these programs now generate realistic and coherent text, images, and music. AI is also helping to accelerate research in fields such as medicine. Progress has been so rapid that many in the futurist community are reconsidering their predictions for a technological singularity and when it is likely to arrive.

Sustaining this momentum, however, will require exponential improvements in computer processing power. California-based Cerebras Systems has just unveiled a gigantic new supercomputer, designed for next-generation LLMs, which promises to significantly reduce AI model training times. Condor Galaxy, named after a real galaxy, will link nine supercomputers into a single interconnected network. The first, Condor Galaxy 1 (CG-1), is being deployed this year, with additional machines following in 2024.

CG-1 will feature a massive 54 million cores, 82 terabytes (TB) of memory, and 388 terabits per second (Tbps) of fabric bandwidth. Its AI compute will be 4 exaFLOPS: four quintillion (4,000,000,000,000,000,000) floating-point operations per second. When the final machine in the series is deployed, Condor Galaxy will have a combined compute of 36 exaFLOPS.
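The headline figures are straightforward unit arithmetic. The Python sketch below assumes, as the article implies, that each of the nine planned machines matches CG-1's quoted 4 exaFLOPS at FP16; it converts exaFLOPS to plain FLOPS and totals the network. The 10^23-FLOP workload is a purely hypothetical example, and the runtime it produces is an idealised lower bound, since real training never sustains 100% of peak throughput.

```python
EXA = 10**18  # 1 exaFLOPS = 10^18 floating-point operations per second


def exaflops_to_flops(exaflops: int) -> int:
    """Convert a figure quoted in exaFLOPS to plain FLOPS."""
    return exaflops * EXA


def ideal_seconds(total_flops: int, flops_per_second: int) -> float:
    """Idealised lower bound on runtime, assuming 100% sustained utilisation."""
    return total_flops / flops_per_second


CG1_EXAFLOPS = 4  # CG-1 peak AI compute at FP16, as quoted in the article
NUM_MACHINES = 9  # assumption: all nine Condor Galaxy machines match CG-1

cg1 = exaflops_to_flops(CG1_EXAFLOPS)
network = NUM_MACHINES * cg1

print(f"CG-1: {cg1:,} FLOPS")
print(f"Condor Galaxy: {network // EXA} exaFLOPS")

# Hypothetical workload of 10^23 floating-point operations (illustrative only)
workload = 10**23
print(f"Ideal CG-1 runtime: {ideal_seconds(workload, cg1) / 3600:.1f} hours")
```

In practice, sustained utilisation is well below peak, so any real run would take longer than this bound.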

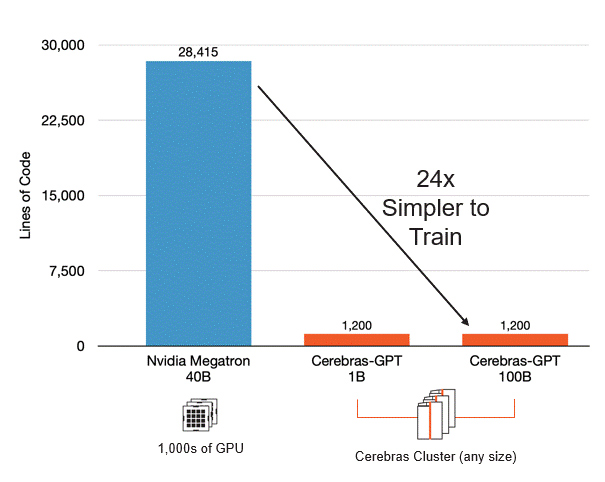

Technically minded readers will note that CG-1's performance figure is quoted at FP16, or half-precision floating point, which uses 16 bits to represent each number. FP16 is less precise than FP32 and the even more precise FP64, the format used to benchmark supercomputers such as Frontier, which last year became the world's first "true" exaFLOP system. For machine learning and AI, however, the slight loss of precision is often an acceptable trade-off for greater speed and lower resource usage, which has made FP16 a popular choice for these applications. Optimised for large language models and generative AI, CG-1 will offer standard support for models of up to 600 billion parameters, with extendable configurations that enable up to 100 trillion parameters (for comparison, GPT-3 has 175 billion).

Cerebras is collaborating with G42, a leading AI and cloud computing company based in the United Arab Emirates (UAE). In 2017, the UAE became the first nation to appoint a Minister for AI in its federal government. Massive investments soon followed, including the founding of Mohamed bin Zayed University of Artificial Intelligence (MBZUAI), a G42 research partner and the world's first postgraduate university focused entirely on AI.

"Collaborating with Cerebras to rapidly deliver the world's fastest AI training supercomputer and laying the foundation for interconnecting a constellation of these supercomputers across the world has been enormously exciting," said Talal Alkaissi, CEO of G42 Cloud, a subsidiary of G42. "This partnership brings together Cerebras' extraordinary compute capabilities, together with G42's multi-industry AI expertise. G42 and Cerebras' shared vision is that Condor Galaxy will be used to address society's most pressing challenges across healthcare, energy, climate action and more."

"Delivering 4 exaFLOPs of AI compute at FP16, CG-1 dramatically reduces AI training timelines while eliminating the pain of distributed compute," said Andrew Feldman, CEO of Cerebras Systems. "Many cloud companies have announced massive GPU clusters that cost billions of dollars to build, but that are extremely difficult to use. Distributing a single model over thousands of tiny GPUs takes months of time from dozens of people with rare expertise. CG-1 eliminates this challenge. Setting up a generative AI model takes minutes, not months, and can be done by a single person. CG-1 is the first of several 4 exaFLOP AI machines to be deployed across the U.S. Over the next year, together with G42, we plan to expand this deployment and stand up a staggering 36 exaFLOPs of efficient, purpose-built AI compute."
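
To make the FP16 trade-off described earlier more concrete, the following Python sketch uses the standard library's `struct` module, whose `'e'`, `'f'` and `'d'` format codes pack IEEE 754 half-, single- and double-precision values, to show how much of the number 0.1 survives in each format. It then estimates the memory needed just to store model weights at 2 bytes (FP16) or 4 bytes (FP32) per parameter, for the model sizes mentioned in this article. These are weights-only figures under that simplifying assumption: real training also needs memory for gradients, optimiser state and activations, and the sketch says nothing about how CG-1 itself manages memory.

```python
import struct

# IEEE 754 formats available through the standard-library struct module
FORMATS = {"FP16": "<e", "FP32": "<f", "FP64": "<d"}
BYTES_PER_PARAM = {"FP16": 2, "FP32": 4, "FP64": 8}


def round_trip(value: float, precision: str) -> float:
    """Store `value` in the given floating-point format and read it back."""
    fmt = FORMATS[precision]
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


def weight_bytes(num_params: int, precision: str) -> int:
    """Bytes for the weights alone (ignores gradients, optimiser state, activations)."""
    return num_params * BYTES_PER_PARAM[precision]


# 1. Precision: how much of 0.1 survives in each format?
x = 0.1
for precision in FORMATS:
    stored = round_trip(x, precision)
    print(f"{precision}: stored {stored!r}, error {abs(stored - x):.2e}")

# 2. Resource usage: weight storage for the model sizes quoted in the article
models = {
    "GPT-3 (175 billion)": 175 * 10**9,
    "CG-1 standard (600 billion)": 600 * 10**9,
    "CG-1 extended (100 trillion)": 100 * 10**12,
}
for name, params in models.items():
    fp16_tb = weight_bytes(params, "FP16") / 10**12
    fp32_tb = weight_bytes(params, "FP32") / 10**12
    print(f"{name}: {fp16_tb:,.2f} TB at FP16 vs {fp32_tb:,.2f} TB at FP32")
```

Halving the bytes per value is where much of FP16's efficiency comes from: less storage and memory traffic per parameter, at the cost of roughly three significant decimal digits of precision rather than about seven for FP32.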
