22nd February 2024

Google reveals next-generation AI model

Gemini 1.5 Pro includes a breakthrough in long-context understanding, handling up to 1 million tokens. It can also decipher the content of videos and describe what is happening in a scene.

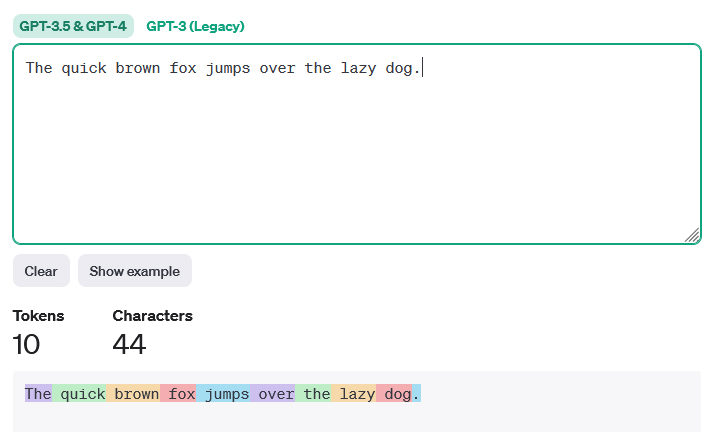

In the world of large language models (LLMs) like ChatGPT, so-called "tokens" are the fundamental units of text that these models process, akin to words, punctuation marks, or parts of words in human language. When we say a model can handle a certain number of tokens, we mean the maximum amount of text, measured in tokens (spaces and punctuation included), that it can process at once. This limit, often called the "context window", matters both for accepting a user's input and for generating coherent, relevant responses, because it determines how much information the artificial intelligence (AI) can work with at one time. In the screenshotted example below (from OpenAI's Tokenizer), the sentence consists of 44 characters and 10 tokens; the full stop at the end is counted as a single token.
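To show how words can break into several tokens, here is a minimal sketch of greedy longest-match tokenization. It is a deliberate simplification: the vocabulary below is made up, and real tokenizers (OpenAI's use byte-pair encoding) learn vocabularies of tens of thousands of pieces from data, so the counts will not match OpenAI's Tokenizer.

```python
def tokenize(text: str, vocab: set[str]) -> list[str]:
    """Split text into tokens by greedy longest match against vocab.

    At each position, take the longest vocabulary entry that matches;
    if nothing matches, the single character becomes its own token.
    """
    max_len = max(len(piece) for piece in vocab)
    tokens: list[str] = []
    i = 0
    while i < len(text):
        # Try the longest candidate first and shrink until something matches.
        for length in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + length]
            if piece in vocab or length == 1:
                break
        tokens.append(piece)
        i += len(piece)
    return tokens


# A tiny, hypothetical vocabulary. Leading spaces belong to the word pieces,
# and "s" and "." are tokens in their own right.
VOCAB = {"Token", "izer", "s", " split", " word", " into", " piece", "."}

sentence = "Tokenizers split words into pieces."
tokens = tokenize(sentence, VOCAB)
print(tokens)
# ['Token', 'izer', 's', ' split', ' word', 's', ' into', ' piece', 's', '.']
print(len(sentence), "characters,", len(tokens), "tokens")
# 35 characters, 10 tokens
```

As in the screenshotted example, the full stop ends up as a token of its own, while a word like "Tokenizers" is split into three pieces.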

Token numbers in LLMs have increased rapidly over the last several years. GPT-3, released in 2020 as the precursor to ChatGPT, had a maximum size of 2,048 tokens, covering both a user's prompt and the generated response. ChatGPT itself (based on GPT-3.5) doubled this to 4,096, and the more advanced GPT-4 came in two versions, offering 8,192 and 32,768 tokens. Upon its launch in March 2023, users noted the marked improvement of GPT-4, which could handle very large blocks of text and code while giving more nuanced and helpful responses.

Other AI companies have also been expanding their models' input/output sizes. These include Google, which announced the Gemini series of LLMs at the end of last year, offering up to 32,000 tokens. Google claimed that Gemini Ultra, the most powerful version, could outperform GPT-4 on a wide variety of benchmarks. In November 2023, OpenAI launched the even more powerful GPT-4 Turbo, with up to 128,000 tokens, equivalent to a full-length book of around 300 pages. Claude 2.1, released in the same month by startup Anthropic, supported 200,000 tokens, the equivalent of more than 500 pages.

In the race to develop ever-larger context windows, Google now appears to have taken the lead with a major upgrade of Gemini, known as Gemini 1.5 Pro. While its standard context window is 128,000 tokens, Google is allowing a small number of customers to experiment with up to a million tokens, nearly eight times the size of GPT-4 Turbo's. Furthermore, Google has been researching the model's performance with an astonishing 10 million tokens, more than 300 times larger than both its predecessor and the standard GPT-4.
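The comparisons above are simple ratios of context sizes. The sketch below collects the figures quoted in this article and reproduces that arithmetic. The dictionary keys are informal labels rather than official API model names, and the `fits` helper assumes, as described for GPT-3, that the prompt and the response share a single token budget; many newer models also impose a separate cap on output length.

```python
# Maximum context sizes in tokens, as quoted in the article. The keys are
# informal labels, not official API model identifiers.
CONTEXT_WINDOWS = {
    "GPT-3": 2_048,
    "GPT-3.5 (ChatGPT)": 4_096,
    "GPT-4 (8K)": 8_192,
    "GPT-4 (32K)": 32_768,
    "Gemini 1.0": 32_000,
    "GPT-4 Turbo": 128_000,
    "Claude 2.1": 200_000,
    "Gemini 1.5 Pro (preview)": 1_000_000,
    "Gemini 1.5 Pro (research)": 10_000_000,
}


def ratio(model_a: str, model_b: str) -> float:
    """How many times larger model_a's context window is than model_b's."""
    return CONTEXT_WINDOWS[model_a] / CONTEXT_WINDOWS[model_b]


def fits(model: str, prompt_tokens: int, max_response_tokens: int) -> bool:
    """Assumes prompt and response share one budget, as described for GPT-3."""
    return prompt_tokens + max_response_tokens <= CONTEXT_WINDOWS[model]


print(ratio("Gemini 1.5 Pro (preview)", "GPT-4 Turbo"))  # 7.8125
print(ratio("Gemini 1.5 Pro (research)", "Gemini 1.0"))  # 312.5
print(fits("GPT-3", 1_500, 500))  # True  (2,000 <= 2,048)
print(fits("GPT-3", 1_500, 600))  # False (2,100 > 2,048)
```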

According to Google, this "step change" incorporates a new architecture built on a mixture-of-experts (MoE) approach. Whereas a traditional Transformer, like the one used in Gemini 1.0 and many other AI models, functions as one large neural network, an MoE model is divided into smaller "expert" networks. The model learns to activate only the experts most relevant to a given input, which improves efficiency by letting different groups of neural networks specialise in particular tasks.

"Longer context windows show us the promise of what is possible," said Sundar Pichai, CEO of Google and Alphabet. "They will enable entirely new capabilities and help developers build much more useful models and applications. We are excited to offer a limited preview of this experimental feature to developers and enterprise customers."

Like its predecessor, Gemini 1.5 Pro is natively multimodal, capable of understanding and responding to text, image, audio, and video inputs. It can process up to an hour of silent video, 11 hours of audio, 30,000 lines of code, or 700,000 words in one go. An example of its capabilities can be seen in the video demonstration below. The AI is tasked with analysing a 44-minute silent Buster Keaton film: summarising its plot, answering a question about the writing on a slip of paper that appears partway through, and pinpointing the moment depicted in a hand-drawn sketch. For more details, see the Gemini 1.5 Pro technical report.
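To make the mixture-of-experts idea more concrete, here is a minimal, generic sketch of a sparsely gated MoE layer with top-k routing. It is not Google's implementation, and the article does not describe how Gemini 1.5 routes its inputs; the expert functions, gate weights and the choice of k = 2 are hypothetical toy values.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, experts, gate, k=2):
    """Generic sparse top-k mixture-of-experts layer.

    x: input vector (list of floats)
    experts: list of functions, each mapping a vector to a vector
    gate: one weight vector per expert; its dot product with x is that
          expert's routing score
    k: number of experts actually run for this input
    """
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in gate]
    # Keep only the k highest-scoring experts; the others are never evaluated,
    # which is where the compute saving comes from.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    weights = softmax([scores[i] for i in chosen])
    # The output is the gate-weighted sum of the chosen experts' outputs.
    out = [0.0] * len(x)
    for weight, i in zip(weights, chosen):
        y = experts[i](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, chosen


# Four toy "experts" that simply scale their input by 1, 2, 3 and 4.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate = [[0.1, 0.0], [0.9, 0.0], [0.5, 0.0], [-1.0, 0.0]]

out, chosen = moe_forward([1.0, 0.0], experts, gate, k=2)
print(chosen)  # [1, 2]: only the second and third experts run
```

In Transformer-based MoE models, this kind of routing typically happens separately for each token inside each MoE layer, with the experts being feed-forward sub-networks rather than simple scaling functions.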
