22nd March 2024

New AI chips feature 208 billion transistors

Chip giant NVIDIA has launched its Blackwell family of processors, which are set to provide yet another leap in scale and speed for AI.

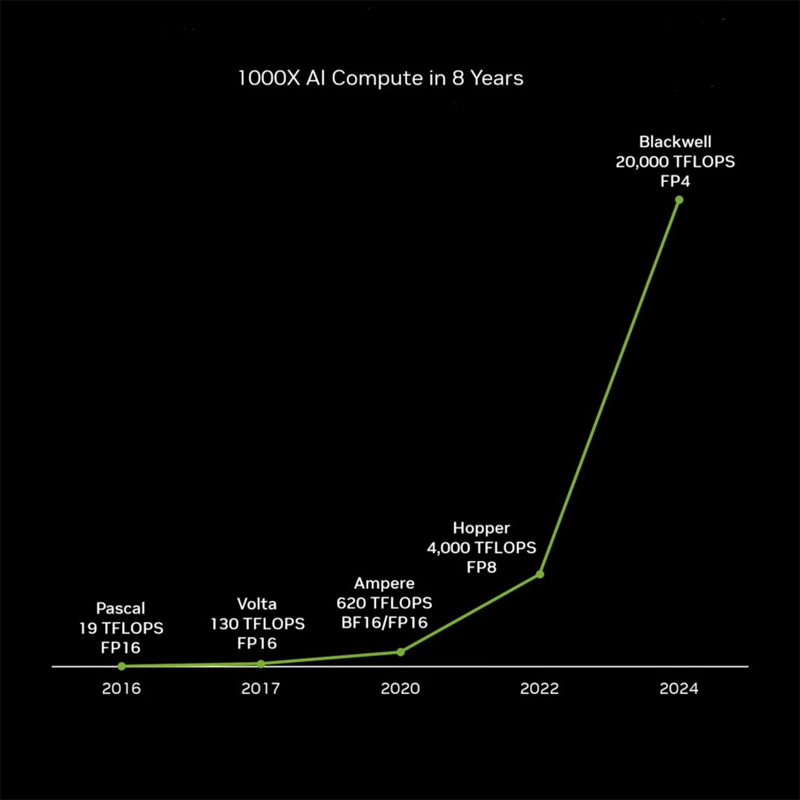

NVIDIA has been at the forefront of the current tech boom, with its graphics processing units (GPUs) not only transforming video games and professional visuals but also becoming the backbone of artificial intelligence (AI) research and development. At the GTC conference in San Jose this week, the company revealed its latest innovations. One of the major highlights was Blackwell, a GPU architecture featuring six new technologies. These next-generation chips, billed as the world's fastest GPUs, are intended to sustain the exponential growth of computing and generative AI.

"For three decades we've pursued accelerated computing, with the goal of enabling transformative breakthroughs like deep learning and AI," said Jensen Huang, founder and CEO of NVIDIA. "Generative AI is the defining technology of our time. Blackwell is the engine to power this new industrial revolution. Working with the most dynamic companies in the world, we will realise the promise of AI for every industry."

Each Blackwell GPU combines two dies of 104 billion transistors apiece, unified as a single chip with 208 billion transistors. This number is 35% greater than AMD's recent MI300X accelerator, which held the previous record for transistor count, and is 2.6 times that of NVIDIA's current flagship device, the H200. The chips are manufactured using a custom-built 4 nanometre (nm) process developed by Taiwan Semiconductor Manufacturing Company (TSMC).

In addition to its massive transistor count, Blackwell has more memory (192 GB) than the Hopper-based H200 (141 GB) and H100 (94 GB), and it features new 4-bit floating point (FP4) AI inference capabilities. Measured in floating point operations per second (FLOPS), this means it delivers five times the performance of Hopper and 1,000 times that of 2016's Pascal.
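As a rough sanity check on the transistor figures above, the following Python sketch works backwards from the article's own ratios to the baseline counts they imply. The baseline values are derived here, not quoted from AMD or NVIDIA, and the helper name `implied_baseline` is purely illustrative.

```python
# Figures quoted in the article, in billions of transistors.
DIE_TRANSISTORS_BN = 104
DIES_PER_GPU = 2
BLACKWELL_TRANSISTORS_BN = DIE_TRANSISTORS_BN * DIES_PER_GPU  # 208


def implied_baseline(total_bn: float, ratio: float) -> float:
    """Return the baseline implied by 'total is `ratio` times the baseline'."""
    return total_bn / ratio


# "35% greater than" the MI300X corresponds to a ratio of 1.35.
mi300x_implied_bn = implied_baseline(BLACKWELL_TRANSISTORS_BN, 1.35)
# The 2.6x comparison with the H200 is read here as a plain ratio of 2.6
# (an assumption about how the article's figure was computed).
h200_implied_bn = implied_baseline(BLACKWELL_TRANSISTORS_BN, 2.6)

print(f"Blackwell: {BLACKWELL_TRANSISTORS_BN} billion")
print(f"Implied MI300X count: about {mi300x_implied_bn:.0f} billion")
print(f"Implied H200 count: about {h200_implied_bn:.0f} billion")
```

Under these assumptions, the implied baselines come out at roughly 154 billion transistors for the MI300X and 80 billion for the H200.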

These processors can also be combined to further enhance their capabilities. As well as the standard version, NVIDIA will be offering two larger configurations: a Blackwell "superchip" and a rack-scale design.

The first of these, known as the GB200 Grace Blackwell Superchip, consists of twin Blackwell GPUs alongside a Grace CPU. This results in 40 petaFLOPS of processing power (FP4) and 16 TB/s of GPU bandwidth.

The second is a much larger configuration, known as GB200 NVL72, consisting of 72 Blackwell GPUs alongside 36 Grace CPUs. This option will provide a colossal 1,440 petaFLOPS and 576 TB/s of GPU bandwidth, supporting models with up to 10 trillion parameters, which is an order of magnitude greater than the biggest AI systems of today.
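The rack-scale figures follow directly from the superchip figures if peak throughput and bandwidth are assumed to add up linearly. The Python sketch below shows that arithmetic. The `Superchip` class and `rack_totals` function are hypothetical names used only for illustration, and the result is a sum of quoted peak numbers, not a measured benchmark.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Superchip:
    """Per-superchip figures as quoted in the article (GB200)."""
    gpus: int = 2
    cpus: int = 1
    petaflops_fp4: int = 40
    gpu_bandwidth_tbs: int = 16


def rack_totals(total_gpus: int, chip: Superchip = Superchip()) -> dict:
    """Sum peak figures for a rack, assuming simple linear scaling."""
    if total_gpus % chip.gpus != 0:
        raise ValueError("GPU count must be a multiple of GPUs per superchip")
    superchips = total_gpus // chip.gpus
    return {
        "superchips": superchips,
        "grace_cpus": superchips * chip.cpus,
        "petaflops_fp4": superchips * chip.petaflops_fp4,
        "gpu_bandwidth_tbs": superchips * chip.gpu_bandwidth_tbs,
    }


# GB200 NVL72: 72 Blackwell GPUs in one rack.
nvl72 = rack_totals(72)
print(nvl72)
```

With 72 GPUs this gives 36 superchips, 36 Grace CPUs, 1,440 petaFLOPS and 576 TB/s, matching the numbers quoted for the GB200 NVL72.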

25x energy efficiency

In recent years, energy efficiency has become an increasing concern within the technology sector. Liquid-cooled GB200 NVL72 racks are designed to reduce the carbon footprint and energy consumption of a data centre. The liquid cooling increases compute density, reduces the amount of floor space needed, and enables high-bandwidth, low-latency GPU communication. Compared to NVIDIA's air-cooled H100 infrastructure, GB200 racks can deliver 25x more performance at the same power while reducing water consumption.

Among the many organisations expected to adopt the Blackwell processors are Amazon Web Services, Dell Technologies, Google, Meta, Microsoft, OpenAI, Oracle, Tesla and xAI. The first shipments are expected in 2024, although ramping up to significant volumes will take until 2025, according to NVIDIA. Each Blackwell GPU is likely to cost between $30,000 and $40,000.

"We are committed to offering our customers the most advanced infrastructure to power their AI workloads," said Satya Nadella, the executive chairman and CEO of Microsoft. "By bringing the GB200 Grace Blackwell processor to our data centres globally, we are building on our long-standing history of optimising NVIDIA GPUs for our cloud, as we make the promise of AI real for organisations everywhere."

"Blackwell offers massive performance leaps, and will accelerate our ability to deliver leading-edge models," said Sam Altman, CEO of OpenAI, the company behind ChatGPT. "We're excited to continue working with NVIDIA to enhance AI compute."

"The transformative potential of AI is incredible, and it will help us solve some of the world's most important scientific problems," said Demis Hassabis, cofounder and CEO of Google DeepMind. "Blackwell's breakthrough technological capabilities will provide the critical compute needed to help the world's brightest minds chart new scientific discoveries."
