14th September 2018

Robots can now pick up any object after inspecting it

A breakthrough at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) could allow robots to perform a variety of useful tasks in homes and workplaces.

For hundreds of thousands of years, humans have been masters of dexterity – a skill that can be largely credited to our vision. Robots, meanwhile, are still catching up. Certainly there's been some progress: for a few decades now, robots in controlled environments like assembly lines have been able to pick up the same object over and over again. More recently, breakthroughs in computer vision have enabled robots to make basic distinctions between objects. Even then, however, the systems don't truly understand objects' shapes, so there's little the robots can do after a quick pick-up.

This week, researchers from MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) announced a key breakthrough: a system that lets robots inspect random objects, and visually understand them well enough to accomplish specific tasks without ever having seen them before.

The system, called Dense Object Nets (DON), looks at objects as "collections of points" that serve as visual roadmaps. This approach lets robots better understand and manipulate items and, most importantly, even allows them to pick up a specific object from a clutter of similar ones – a valuable skill for the kinds of machines that companies like Amazon and Walmart use in their warehouses.

For example, someone might use DON to get a robot to grab onto a specific spot on an object; say, the tongue of a shoe. Having learned that, the robot can look at a shoe it has never seen before, and successfully grab its tongue.

"Many approaches to manipulation can't identify specific parts of an object across the many orientations that object may encounter," says Lucas Manuelli – a PhD student who wrote a new paper about the system. "For example, existing algorithms would be unable to grasp a mug by its handle, especially if the mug could be in multiple orientations, like upright, or on its side."

The team views potential applications not just in manufacturing settings, but also homes. Imagine giving the system an image of a tidy house, and letting it clean while you're at work, or using an image of dishes so that the system puts your plates away while you're on vacation.

What's also noteworthy is that none of the data was actually labelled by humans. Instead, the system is what the team calls "self-supervised," not requiring any human annotations.

Two common approaches to robot grasping involve either task-specific learning, or creating a general grasping algorithm. These techniques both have obstacles: task-specific methods are difficult to generalise to other tasks, and general grasping doesn't get specific enough to deal with the nuances of particular tasks like putting objects in specific spots.

The DON system, however, essentially creates a series of coordinates on a given object, which serve as a kind of visual roadmap, to give the robot a better understanding of what it needs to grasp, and where.
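To make the "visual roadmap" idea concrete, here is a minimal Python sketch, not the researchers' code. It assumes that every pixel of an image has already been assigned a small descriptor vector (its "coordinates" on the object), and that locating a requested point in a new image amounts to finding the pixel whose descriptor is closest to the requested one. The function name `best_match`, the toy `new_image` grid and all descriptor values are invented for illustration.

```python
import math


def best_match(target_descriptor, descriptor_image):
    """Return ((row, col), distance) for the pixel whose descriptor
    is closest (Euclidean distance) to target_descriptor."""
    best_pixel, best_dist = None, math.inf
    for r, row in enumerate(descriptor_image):
        for c, descriptor in enumerate(row):
            dist = math.dist(target_descriptor, descriptor)
            if dist < best_dist:
                best_pixel, best_dist = (r, c), dist
    return best_pixel, best_dist


# Toy 2x3 "descriptor image": each pixel holds a made-up 3-D descriptor.
# In a real system these values would come from a trained network.
new_image = [
    [(0.0, 0.0, 0.0), (0.9, 0.1, 0.0), (0.2, 0.8, 0.1)],
    [(0.1, 0.1, 0.9), (0.5, 0.5, 0.5), (1.0, 1.0, 0.0)],
]

# Hypothetical descriptor of a point a person picked in a reference image,
# for instance the tongue of a shoe.
clicked = (0.25, 0.75, 0.1)

pixel, distance = best_match(clicked, new_image)
print(pixel, round(distance, 3))
```

A practical system would presumably also reject matches whose distance is too large, so that the robot does not reach for a point that is not actually visible in the new image.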

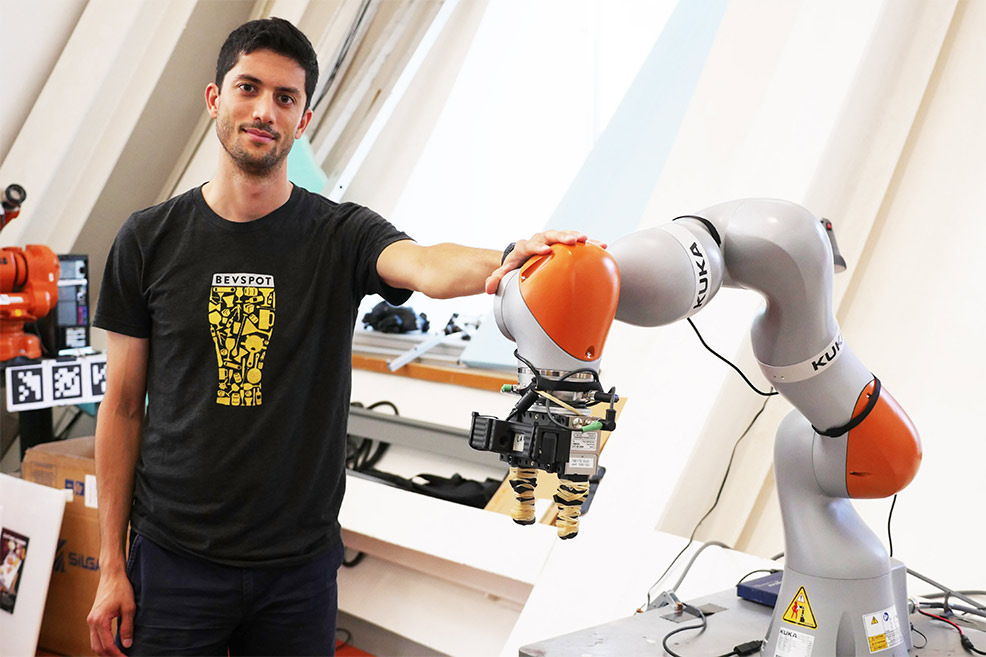

The team trained the system to look at objects as a series of points that make up a larger coordinate system. It can then map different points together to visualise an object's overall 3-D shape, similar to how panoramic photos are stitched together from multiple photos. After training, if a person specifies a point on an object, the robot can take a photo of that object, and then identify and match points to pick up the object at that specified point.

This is different from systems like UC-Berkeley's DexNet, which is able to grasp many different items – but is unable to satisfy a specific request. Imagine a child at 18 months old, who doesn't understand which toy you want it to play with but can still grab lots of items, versus a four-year-old who can respond to "go grab your truck by the red end of it."

In one set of tests done on a soft caterpillar toy, a Kuka robotic arm powered by DON could grasp the toy's right ear from a range of different configurations. This showed that, among other things, the system has the ability to distinguish left from right on symmetrical objects.

When testing on a bin of different baseball hats, DON could pick out a specific target hat, despite all of the hats having very similar designs – and having never seen pictures of the hats in training data before.

"In factories, robots often need complex part feeders to work reliably," says fellow PhD student and paper co-author, Pete Florence. "But a system like this that can understand objects' orientations could just take a picture and be able to grasp and adjust the object accordingly."

Manuelli and Florence will present their paper about the DON system next month at the Conference on Robot Learning in Zürich, Switzerland.

In the future, the researchers hope to further improve their system, to the point that it can perform specific tasks with a deeper understanding of the corresponding objects: for example, not only grasping a specific object at a specific point, but actually manipulating that object with the ultimate goal of, say, cleaning a desk. Perhaps in the next few decades, the sci-fi image of truly multi-purpose domestic robots may finally become a reality.
