25th August 2021

World's first brain-scale AI solution

Cerebras Systems, a California-based developer of semiconductors and artificial intelligence (AI) systems, has announced a new system that can support AI models of 120 trillion parameters on a single computer.

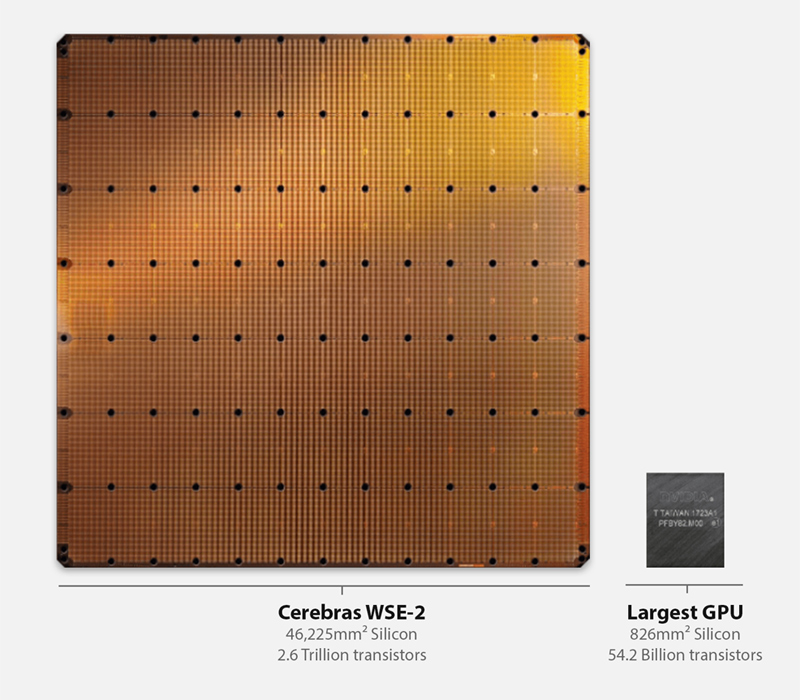

The human brain contains an estimated 100 trillion synapses. To date, the largest AI hardware clusters have reached roughly 1% of human brain scale, or about 1 trillion synapse equivalents (known as parameters). Even at this fraction of full brain scale, these clusters of graphics processors occupy acres of space, consume megawatts of power, and require dedicated teams to operate.

Cerebras Systems has been featured on this blog before. In August 2019, the company unveiled the largest chip ever made. The Cerebras Wafer Scale Engine contained more than 1.2 trillion transistors and was about 56 times larger than the largest graphics processing unit (GPU). Keeping with its theme of "bigger means better", Cerebras Systems yesterday announced its latest technology breakthrough: a single computer system able to support AI models of more than 120 trillion parameters, exceeding the raw computational equivalent of a full human brain.

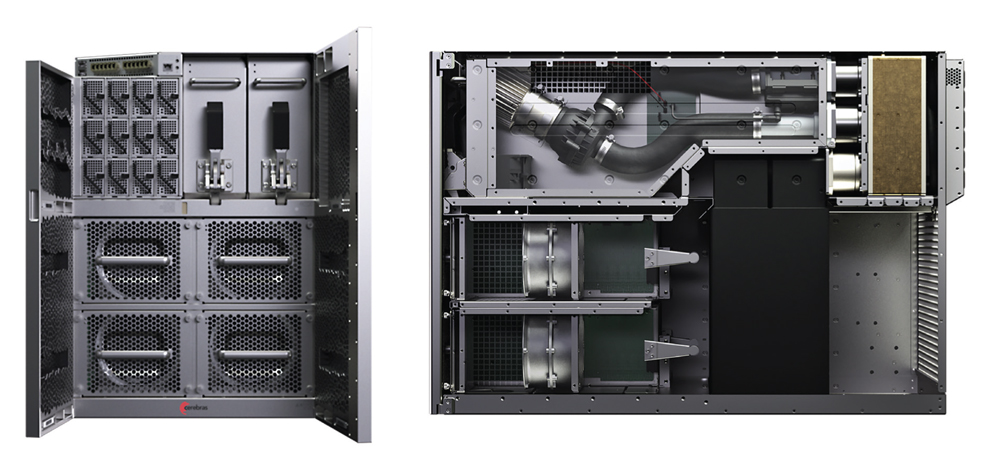

This giant expansion of computational power is achieved by combining a refrigerator-sized CS-2 accelerator (pictured above) with four new innovations: Cerebras Weight Streaming, a new software execution architecture; Cerebras MemoryX, a memory extension technology; Cerebras SwarmX, a high-performance interconnect fabric technology; and Selectable Sparsity, for dynamic sparsity harvesting. The CS-2 is powered by the second-generation Wafer Scale Engine (WSE-2), which contains 2.6 trillion transistors, more than double the number in the 2019 chip mentioned earlier. The WSE-2 also packs 850,000 AI-optimised cores and is built on a 7 nanometre (nm) process. According to Cerebras, the new system will allow users to begin developing brain-scale neural networks and to distribute workloads across enormous clusters of AI-optimised cores with push-button ease. The company says it sets a new benchmark in model size, compute cluster horsepower, and programming simplicity at scale.

"Today, Cerebras moved the industry forward by increasing the size of the largest networks possible by 100 times," said Andrew Feldman, CEO and co-founder of Cerebras. "Larger networks, such as GPT-3, have already transformed the natural language processing (NLP) landscape, making possible what was previously unimaginable. The industry is moving past 1 trillion parameter models, and we are extending that boundary by two orders of magnitude, enabling brain-scale neural networks with 120 trillion parameters."

"The last several years have shown us that, for NLP models, insights scale directly with parameters – the more parameters, the better the results," said Rick Stevens, Associate Director of Argonne National Laboratory. "Cerebras' inventions, which will provide a 100x increase in parameter capacity, may have the potential to transform the industry. For the first time, we will be able to explore brain-sized models – opening up vast new avenues of research and insight."

"One of the largest challenges of using large clusters to solve AI problems is the complexity and time required to set up, configure and then optimise them for a specific neural network," said Karl Freund, founder and principal analyst of Cambrian AI. "The Weight Streaming execution model is so elegant in its simplicity, and it allows for a much more fundamentally straightforward distribution of work across the CS-2 clusters' incredible compute resources. With Weight Streaming, Cerebras is removing all the complexity we have to face today around building and efficiently using enormous clusters – moving the industry forward in what I think will be a transformational journey."
