9th March 2023 Google's PaLM-E – a step towards artificial general intelligence (AGI)? This week, AI researchers at Google have revealed PaLM-E, an embodied multimodal language model with 562 billion parameters.

By now, many if not most of our readers will have used ChatGPT, the publicly available chatbot developed by U.S. company OpenAI. Based on the GPT-3 family of large language models, its powerful capabilities have made headlines around the world. Although far from perfect, the program's 175 billion parameters can generate detailed responses and articulate answers across many domains of knowledge. With subsequent models likely to be even larger and more sophisticated, the Turing Test now seems highly likely to be passed by the end of this decade, or sooner. However, a wide gulf exists between human-like chatbots – which can be thought of as weak or "narrow" AI – and the far more profound milestone of artificial general intelligence (AGI) or "strong" AI. Despite the impressive performance of ChatGPT, it belongs to the former category. Future AI models will need to satisfy a number of criteria before they can be considered AGI. Among these are the ability to recall previously learned information and apply it to new and different tasks. True AGI will demonstrate an ability to learn practically anything, even from experiences its creators never programmed it to handle, solving most problems as easily as a human. Finally, it can be argued that AGI requires embodied cognition. In other words, physical presence in the form of a robotic body, to fully interact with real-world environments. While androids comparable in quality to Data from Star Trek may be a distant prospect, the milestone of AGI does not necessarily require a machine to be indistinguishable from a human in appearance. This week, a team from Google and the Technical University of Berlin has revealed what might be considered an early or proto-AGI. This combines a multimodal visual-language model (VLM) within a mobile robot, able to sense and explore the world around it. PaLM-E, as the system is called, is the largest VLM ever developed, with 562 billion parameters (more than triple that of ChatGPT). It can gather a continuous stream of real-world data, such as objects and colours, feeding that information into a powerful language model. In doing so, it establishes a link between words and visual scenes. This enables PaLM-E to learn from past experiences and conduct new tasks without the need for retraining.

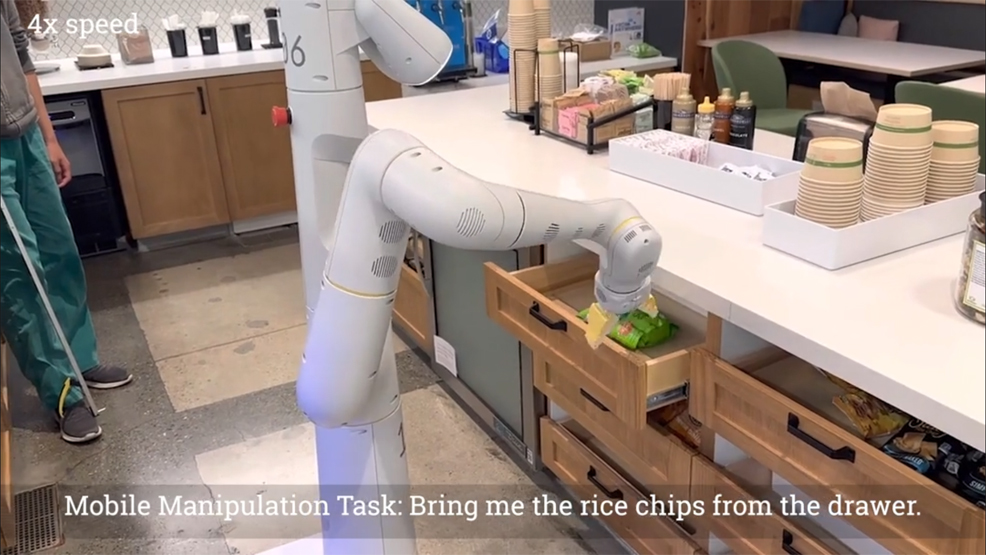

In one demo video, PaLM-E responds to the command "Bring me the rice chips from the drawer," which includes multiple planning steps, as well as incorporating visual feedback from the robot's camera. It continues to perform this task even when interrupted by a human researcher who grabs the rice chips and alters the bag's location. In another test, the same robot completes the order "Bring me a green star," an object it has never seen before. Further examples of learning are presented by the researchers. In one case, the instruction is to "push red blocks to the coffee cup". The dataset contains only three demonstrations with a coffee cup in them, none including red blocks. In another generalisation task, the robot is able to "push green blocks to the turtle" even though it has never seen the turtle before. A more detailed analysis is provided by the teams' paper, in which they confirm that PaLM-E is exhibiting "positive transfer" – which means it can transfer knowledge and skills learned from previous tasks to new ones, leading to higher performance than single-task robot models. "PaLM-E, in addition to being trained on robotics tasks, is a visual-language generalist with state-of-the-art performance on OK-VQA, and retains generalist language capabilities with increasing scale," says the team. "Scaling up the language model size leads to significantly less catastrophic forgetting while becoming an embodied agent. Our model showcases emergent capabilities like multimodal chain of thought reasoning, and the ability to reason over multiple images, despite training on only single-image prompts." According to Google, the next phase of research will involve testing applications for settings such as home automation and industrial robotics. The team also hopes that their work will inspire more research into multimodal reasoning and embodied AI.

Comments »

If you enjoyed this article, please consider sharing it:

|